Architectural Considerations & Optimization Best Practices

The integration of high-performance computing (HPC) in the Cloud is not just about scaling up computational power; it’s about architecting systems that can efficiently manage and process the vast amounts of data generated in Biotech and Pharma research. For instance, in drug discovery and genomic sequencing, researchers deal with datasets that are not just large but also complex, requiring sophisticated computational approaches.

However, designing an effective HPC Cloud environment comes with challenges. It requires a deep understanding of both the computational requirements of specific workflows and the capabilities of Cloud-based HPC solutions. For example, tasks like ultra-large library docking in drug discovery or complex genomic analyses demand not just high computational power but also specific types of processing cores and optimized memory management.

In addition, the cost-efficiency of Cloud-based HPC is a critical factor. It’s essential to balance the computational needs with the financial implications, ensuring that the resources are utilized optimally without unnecessary expenditure.

Understanding the need for HPC in Bio-IT

In Life Sciences R&D, extracting meaningful insights from data requires sophisticated computational capabilities. HPC plays a pivotal role by enabling rapid processing and analysis of extensive datasets. For example, HPC facilitates multi-omics data integration, combining genomics with transcriptomics and metabolomics for a comprehensive understanding of biological processes and disease. It also aids in developing patient-specific simulation models, such as detailed heart or brain models, which are pivotal for personalized medicine.

Furthermore, HPC is instrumental in conducting large-scale epidemiological studies, helping to track disease patterns and health outcomes, which are essential for effective public health interventions. In drug discovery, HPC accelerates not only ultra-large library docking but also chemical informatics and materials science, fostering the development of new compounds and drug delivery systems.

This computational power is essential not only for advancing research but also for responding swiftly in critical situations like pandemics. Additionally, HPC can integrate environmental and social data, improving models of disease outbreaks and public health trends. The advanced machine learning models powered by HPC, such as deep neural networks, are transforming the analytical capabilities of researchers.

HPC’s role in handling complex data also involves accuracy and the ability to manage diverse data types. Biotech and Pharma R&D often deal with heterogeneous data, including structured and unstructured data from various sources. The advanced data visualization and user interface capabilities supported by HPC allow for intricate data patterns to be revealed, providing deeper insights into research data.

HPC is also key in creating collaboration and data-sharing platforms that enhance the collective research efforts of scientists, clinicians, and patients globally. HPC systems are adept at integrating and analyzing these diverse datasets, providing a comprehensive view essential for informed decision-making in research and development.

Architectural Considerations for HPC in the Cloud

In order to construct an HPC environment that is both robust and adaptable, Life Sciences organizations must carefully consider several key architectural components:

- Scalability and flexibility: Central to the design of Cloud-based HPC systems is the ability to scale resources in response to the varying intensity of computational tasks. This flexibility is essential for efficiently managing workloads, whether they involve tasks like complex protein-structure modeling, in-depth patient data analytics, real-time health monitoring systems, or even advanced imaging diagnostics.

- Compute power: The computational heart of HPC is compute power, which must be tailored to the specific needs of Bio-IT tasks. The choice between CPUs, GPUs, or a combination of both should be aligned with the nature of the computational work, such as parallel processing for molecular modeling or intensive data analysis.

- Storage solutions: Given the large and complex nature of datasets in Bio-IT, storage solutions must be robust and agile. They should provide not only ample capacity but also fast access to data, ensuring that storage does not become a bottleneck in high-speed computational processes.

- Network architecture: A strong and efficient network is vital for Cloud-based HPC, facilitating quick and reliable data transfer. This is especially important in collaborative research environments, where data sharing and synchronization across different teams and locations are common.

- Integration with existing infrastructure: Many Bio-IT environments operate within a hybrid model, combining Cloud resources with on-premises systems. The architectural design must ensure a seamless integration of these environments, maintaining consistent efficiency and data integrity across the computational ecosystem.
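The scalability consideration above can be made concrete with a small sizing calculation. The following is a minimal sketch, not a production autoscaler: the function name, the node limits, and the example figures are all hypothetical, and it assumes the pending work can be expressed in core-hours and spread evenly across nodes.

```python
import math


def desired_nodes(pending_core_hours, cores_per_node, target_hours,
                  min_nodes=0, max_nodes=64):
    """Estimate how many nodes are needed to finish the pending work
    within target_hours, clamped to the cluster's allowed size range.

    All parameters are illustrative assumptions, not provider defaults.
    """
    if cores_per_node <= 0 or target_hours <= 0:
        raise ValueError("cores_per_node and target_hours must be positive")
    # Core-hours one node can deliver inside the target window.
    capacity_per_node = cores_per_node * target_hours
    needed = math.ceil(pending_core_hours / capacity_per_node)
    return max(min_nodes, min(max_nodes, needed))


# Hypothetical example: 9,600 core-hours queued, 96-core nodes, 4-hour target.
print(desired_nodes(9600, 96, 4))  # 25
```

Clamping to a maximum keeps a burst of submissions from scaling the cluster, and the bill, without limit; an idle queue scales back to the minimum.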

Optimizing HPC Cloud environments

Optimizing HPC in the Cloud is as crucial as its initial setup. This optimization involves strategic approaches to common challenges like data transfer bottlenecks and latency issues.

Efficiently managing computational tasks is key. This involves prioritizing workloads based on urgency and complexity and dynamically allocating resources to match these priorities. For instance, urgent drug discovery simulations might take precedence over routine data analyses, requiring a reallocation of computational resources.
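The prioritization described above can be sketched with a simple priority queue. This is an illustrative example only: the `Job` class, the job names, and the core counts are hypothetical, and real schedulers weigh many more factors (fair share, wall-time limits, preemption).

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Job:
    priority: int                      # lower number = more urgent
    name: str = field(compare=False)   # not used when ordering jobs
    cores: int = field(compare=False)  # cores the job needs to start


def schedule(jobs, total_cores):
    """Walk jobs from most to least urgent and start each one that
    still fits in the remaining cores; the rest keep waiting."""
    heap = list(jobs)
    heapq.heapify(heap)
    free = total_cores
    started, waiting = [], []
    while heap:
        job = heapq.heappop(heap)
        if job.cores <= free:
            free -= job.cores
            started.append(job.name)
        else:
            waiting.append(job.name)
    return started, waiting


# Hypothetical workload on a 128-core allocation.
jobs = [
    Job(2, "routine-qc", 32),
    Job(0, "docking-simulation", 96),
    Job(1, "variant-calling", 64),
]
started, waiting = schedule(jobs, total_cores=128)
print(started)  # ['docking-simulation', 'routine-qc']
print(waiting)  # ['variant-calling']
```

Note the design choice: the urgent docking simulation starts first, and the small routine job then backfills the leftover cores instead of leaving them idle while the larger job waits.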

But efficiency isn’t just about speed and cost; it’s also about smooth data travel. Optimizing the network to prevent data transfer bottlenecks and reducing latency ensures that data flows freely and swiftly, especially in collaborative projects that span different locations.

In sensitive Bio-IT environments, maintaining high security and compliance standards is another non-negotiable. Regular security audits, adherence to data protection regulations, and implementing robust encryption methods are essential practices.

Maximizing Bio-IT potential with HPC in the Cloud

A well-architected HPC environment in the Cloud is pivotal for advancing research and development in the Biotech and Pharma industries.

By effectively planning, considering architectural needs, and continuously optimizing the setup, organizations can harness the full potential of HPC. This not only accelerates computational workflows but also ensures these processes are cost-effective and secure.

Ready to optimize your HPC/Cloud environment for maximum efficiency and impact? Discover how RCH can guide you through this transformative journey.

Considerations for Enhancing Your In-house Bio-IT Team

As research becomes increasingly data-driven, the need for a robust IT infrastructure, coupled with a team that can navigate the complexities of bioinformatics, is vital to progress. But what happens when your in-house Bio-IT services team encounters challenges beyond their capacity or expertise?

This is where strategic augmentation comes into play. It’s not just a solution but a catalyst for innovation and growth, addressing skill gaps and fostering collaboration for enhanced research outcomes.

Assessing in-house Bio-IT capabilities

The pace of innovation demands an agile team and diverse expertise. A thorough evaluation of your in-house Bio-IT team’s capabilities is the foundational step in this process. It involves a critical analysis of strengths and weaknesses, identifying both skill gaps and bottlenecks, and understanding the nuances of your team’s ability to handle the unique demands of scientific research.

For startup and emerging Biotech organizations, operational pain points can significantly alter the trajectory of research and impede the desired pace of scientific advancement. A comprehensive blueprint that includes team design, resource allocation, technology infrastructure, and workflows is essential to realize an optimal, scalable, and sustainable Bio-IT vision.

Traditional models of sourcing tactical support often clash with these needs, emphasizing the necessity of a Bio-IT Thought Partner that transcends typical staff augmentation and offers specialized experience and a willingness to challenge assumptions.

Identifying skill gaps and emerging needs

Before sourcing the right resources to support your team, it’s essential to identify where the gaps lie. Start by:

- Prioritizing needs: While prioritizing “everything” is often the goal, it’s also the fastest way to get nothing done. Evaluate the overarching goals of your company and team, and decide which skills and efforts are mission-critical and which are “nice to have.”

- Auditing current capabilities: Understand the strengths and weaknesses of your current team. Are they adept at handling large-scale genomic data but struggle with real-time data processing? Recognizing these nuances is the first step.

- Project forecasting: Consider upcoming projects and their specific IT demands. Will there be a need for advanced machine learning techniques or Cloud-based solutions that your team isn’t familiar with?

- Continuous training: While it’s essential to identify gaps, it’s equally crucial to invest in continuous training for your in-house team. This ensures that they remain updated with the latest in the field, reducing the skill gap over time.

Evaluating external options

Once you’ve identified the gaps, the next step is to find the right partners to fill them. Here’s how:

- Specialized expertise: Look for partners who offer specialized expertise that complements your in-house team. For instance, if your team excels in data storage but lacks data analytics experience, find a partner who can bridge that gap.

- Flexibility: The world of Life Sciences is dynamic. Opt for partners who offer flexibility in terms of scaling up or down based on project requirements.

- Cultural fit: Beyond technical expertise, select an external team that aligns with your company’s culture and values. This supports smoother collaboration and integration.

Fostering collaboration for optimal research outcomes

Merging in-house and external teams can be challenging. However, with the right strategies, collaboration can lead to unparalleled research outcomes.

- Open communication: Establish clear communication channels. Regular check-ins, updates, and feedback loops help keep everyone on the same page.

- Define roles: Clearly define the roles and responsibilities of each team member, both in-house and external. This prevents overlaps and ensures that every aspect of the project is adequately addressed.

- Create a shared vision: Make sure the entire team, irrespective of their role, understands the end goal. A shared vision fosters unity and drives everyone towards a common objective.

- Leverage strengths: Recognize and leverage the strengths of each team member. If a member of the external team has a particular expertise, position them in a role that maximizes that strength.

Making the right choice

For IT professionals and decision-makers in Pharma, Biotech, and Life Sciences, the decision to augment the in-house Bio-IT team is not just about filling gaps; it’s about propelling research to new heights, ensuring that the IT infrastructure is not just supportive but also transformative.

When making this decision, consider the long-term implications. While immediate project needs are essential, think about how this augmentation will serve your organization in the years to come. Will it foster innovation? Will it position you as a leader in the field? These are the questions that will guide you toward the right choice.

Life Science research outcomes can change the trajectory of human health, so there’s no room for compromise. Augmenting your in-house Bio-IT team is a commitment to excellence. It’s about recognizing that while your team is formidable, the right partners can make them invincible. Strength comes from recognizing when to seek external expertise.

Pick the right team to supplement yours. Talk to RCH Solutions today.

The Power of AWS Certifications in Cloud Strategy: Unleashing Expertise for Success

Life Sciences organizations engaged in drug discovery, development, and commercialization grapple with intricate challenges. The quest for novel therapeutics demands extensive research, vast datasets, and the integration of multifaceted processes. Managing and analyzing this wealth of data, ensuring compliance with stringent regulations, and streamlining collaboration across global teams are hurdles that demand innovative solutions.

Moreover, the timeline from initial discovery to commercialization is often lengthy, consuming precious time and resources. To overcome these challenges and stay competitive, Life Sciences organizations must harness cutting-edge technologies, optimize data workflows, and maintain compliance without compromise.

Amid these complexities, Amazon Web Services (AWS) emerges as a game-changing ally. AWS’s industry-leading cloud platform includes specialized services tailored to the unique needs of Life Sciences and empowers organizations to:

- Accelerate Research: AWS’s scalable infrastructure facilitates high-performance computing (HPC), enabling faster data analysis, molecular modeling, and genomics research. This acceleration is pivotal in expediting drug discovery.

- Enhance Data Management: With AWS, Life Sciences organizations can store, process, and analyze massive datasets securely. AWS’s data management solutions ensure data integrity, compliance, and accessibility.

- Optimize Collaboration: AWS provides the tools and environment for seamless collaboration among dispersed research teams. Researchers can collaborate in real time, enhancing efficiency and innovation.

- Ensure Security and Compliance: AWS offers robust security measures and compliance certifications specific to the Life Sciences industry, ensuring that sensitive data is protected and regulatory requirements are met.

While AWS holds immense potential, realizing its benefits requires expertise. This is where a trusted AWS partner becomes invaluable. An experienced partner not only understands the intricacies of AWS but also comprehends the unique challenges Life Sciences organizations face.

Partnering with a trusted AWS expert offers:

- Strategic Guidance: A seasoned partner can tailor AWS solutions to align with the Life Sciences sector’s specific goals and regulatory constraints, ensuring a seamless fit.

- Efficient Implementation: AWS experts can expedite the deployment of Cloud solutions, minimizing downtime and maximizing productivity.

- Ongoing Support: Beyond implementation, a trusted partner offers continuous support, ensuring that AWS solutions evolve with the organization’s needs.

- Compliance Assurance: With deep knowledge of industry regulations, a trusted partner can help navigate the compliance landscape, reducing risk and ensuring adherence.

Certified AWS engineers bring transformative expertise to cloud strategy and data architecture, propelling organizations toward unprecedented success.

AWS Certifications: What They Mean for Organizations

AWS offers a comprehensive suite of globally recognized certifications, each representing a distinct level of proficiency in managing AWS Cloud technologies. These certifications are not just badges; they signify a commitment to excellence and a deep understanding of Cloud infrastructure.

In fact, studies show that professionals who pursue AWS certification are faster, more productive troubleshooters than non-certified employees. For research and development IT teams, the AWS certifications held by their members translate into powerful advantages. These certifications unlock the ability to harness AWS’s cloud capabilities for driving innovation, efficiency, and cost-effectiveness in data-driven processes.

Meet RCH’s Certified AWS Experts: Your Key to Advanced Proficiency

At RCH, we prioritize professional and technical skill development across our team, and proudly recognize our AWS-certified professionals:

- Mohammad Taaha, AWS Solutions Architect Professional

- Yogesh Phulke, AWS Solutions Architect Professional

- Michael Moore, AWS DevOps Engineer Professional

- Abdul Samad, AWS Solutions Architect Associate

- Baris Bilgin, AWS Solutions Architect Associate

- Isaac Adanyeguh, AWS Solutions Architect Associate

- Matthew Jaeger, AWS Cloud Practitioner & SysOps Administrator

- Lyndsay Frank, AWS Cloud Practitioner

- Dennis Runner, AWS Cloud Practitioner

- Burcu Dikeç, AWS Cloud Practitioner

When you partner with RCH and our AWS-certified experts, you gain access to technical knowledge and tap into a wealth of experience, innovation, and problem-solving capabilities. Advanced proficiency in AWS means that our team can tackle even the most complex Cloud challenges with confidence and precision.

Our certified AWS experts don’t just deploy Cloud solutions; they architect them with your unique business needs in mind. They optimize for efficiency, scalability, and cost-effectiveness, ensuring your Cloud strategy aligns seamlessly with your organizational goals, including many of the following needs:

- Creating extensive solutions for AWS EC2 with multiple frameworks (EBS, ELB, SSL, Security Groups and IAM), as well as RDS, CloudFormation, Route 53, CloudWatch, CloudFront, CloudTrail, S3, Glue, and Direct Connect.

- Deploying high-performance computing (HPC) clusters on AWS using AWS ParallelCluster running the SGE scheduler.

- Automating operational tasks, including software configuration, server scaling and deployments, and database setups in multiple AWS Cloud environments using modern application and configuration management tools (e.g., CloudFormation and Ansible).

- Working closely with clients to design networks, systems, and storage environments that effectively reflect their business needs, security, and service level requirements.

- Architecting and migrating data from on-premises solutions (Isilon) to AWS (S3 & Glacier) using industry-standard tools (Storage Gateway, Snowball, CLI tools, and DataSync, among others).

- Designing and deploying plans to remediate accounts affected by IP overlap.

All of these tasks have boosted the efficiency of data-oriented processes for clients and made them better able to capitalize on new technologies and workflows.
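As a small illustration of the IP-overlap item in the list above, overlapping address ranges can be detected with Python's standard `ipaddress` module before any remediation plan is drawn up. This is a sketch under stated assumptions: the account names and CIDR blocks are hypothetical, and it is not a description of RCH's actual tooling.

```python
import ipaddress
from itertools import combinations


def find_overlaps(cidrs_by_account):
    """Return pairs of (account, cidr) entries whose address ranges overlap.

    cidrs_by_account maps an account label to a list of CIDR strings.
    """
    entries = [
        (account, ipaddress.ip_network(cidr))
        for account, cidrs in cidrs_by_account.items()
        for cidr in cidrs
    ]
    overlaps = []
    for (acct_a, net_a), (acct_b, net_b) in combinations(entries, 2):
        if net_a.overlaps(net_b):
            overlaps.append(((acct_a, str(net_a)), (acct_b, str(net_b))))
    return overlaps


# Hypothetical accounts and address ranges.
accounts = {
    "research": ["10.0.0.0/16"],
    "clinical": ["10.0.128.0/20", "10.2.0.0/16"],
    "shared-services": ["172.16.0.0/20"],
}
for left, right in find_overlaps(accounts):
    print(left, "overlaps", right)
```

Checking every pair is quadratic in the number of ranges, which is acceptable for the handful of networks a typical multi-account setup contains.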

The Value of Working with AWS Certified Partners

In an era where data and technology are the cornerstones of success, working with a partner who embodies advanced proficiency in AWS is not just a strategic choice—it’s a game-changing move. At RCH Solutions, we leverage the power of AWS certifications to propel your organization toward unparalleled success in the cloud landscape.

Learn how RCH can support your Cloud strategy or CloudOps needs today.

What You Should Expect From a Specialized Bio-IT Partner

Because “good” is no longer good enough, see what to look for, and what to avoid, in a specialized Bio-IT partner.

Gone are the days when selecting a strategic Bio-IT partner for your emerging biotech or pharma was a linear or general IT challenge, when good was good enough because business models were less complex and systems were standardized and simple.

Today, opportunities and discoveries that can lead to significant breakthroughs emerge faster than ever. And your scientists need sustainable and efficient computing solutions that enable them to focus on science, at the speed and efficiency that’s necessary in today’s world of medical innovation. The value your Bio-IT partner adds can be the missing link to unlocking and accelerating your organization’s discovery and development goals … or the weight that’s holding you back.

Read on to learn 5 important qualities that you should not only expect but demand from your Bio-IT partner, as well as the red flags that may signal you’re working with the wrong one.

Subject Matter Expertise & Life Science Mastery vs. General IT Expertise & Experience

Your organization needs a Bio-IT partner with the ability to bridge the gap between science and IT, or Sci-T as we call it, and this is only possible when their unique specialization in the life sciences is backed by their proven subject matter expertise in the field. This means your partner should be up-to-date on the latest technologies but, more importantly, have demonstrable knowledge about your business’ unique needs in the landscape in which it’s operating, and be able to provide working recommendations and solutions to get you where you want, and need, to be. That is what separates the IT generalists from subject matter and life science experts.

Vendor Agnostic vs. Vendor Follower

Technologies and programs that suit your biotech or pharma’s evolving needs are different from organization to organization. Your firm has a highly unique position and individualized objectives that require solutions that are just as bespoke, and we get that. But unfortunately, many Bio-IT partners still build their recommendations based on existing and mutually beneficial supplier relationships that they prioritize, alongside their margins, even when significantly better solutions might be available. And that’s why seeking a strategic partner that is vendor agnostic is so critical. The right Bio-IT partner will look out for your best interest and focus on solutions that propel you to your desired outcomes most efficiently and effectively, ultimately accelerating your discovery.

Collaborative and Thought Partner vs. Order Taker

Anyone can be an order taker. But your organization doesn’t always know what they want to—or should—order. And that is where a collaborative and strategic partner comes in, and can be the difference maker. Your strategic Bio-IT partner should spark creativity, drive innovation, and ultimately cultivate business success. They’ll dive deep into your organizational needs to intimately understand what will propel you to your desired outcomes, and recommend agnostic industry-leading solutions that will get you there. Most importantly, they work on effectively implementing them to streamline systems and processes to create a foundation for sustainability and scalability, which is where the game-changing transformation occurs for your organization.

Individualized and Inventive vs. One-Size-Fits-All

A strategic Bio-IT partner needs to understand that success in the life sciences depends on being able to collect, correlate, and leverage data to uphold a competitive advantage. But no two organizations are the same, share the same objectives, or have the same considerations and dependencies for a compute environment.

Rather than doing more of the same, your Bio-IT partner should view your organization through your individualized lens and seek fit-for-purpose paths that align to your unique challenges and needs. And because they understand both the business and technology landscapes, they should ask probing questions, and have the right expertise to push beyond the surface, and introduce novel solutions to legacy issues, routinely. The result is a service that helps you accelerate the development of your next scientific breakthrough.

Dynamic and Modern Business Acumen vs. Centralized Business Processes

With the pandemic came new business and work processes and procedures, and employees and offices are no longer centralized like they once were. Or maybe yours never was. Either way, the right Bio-IT partner needs to understand the unique technical requirements and the volume of data and information that is now exchanged between employees, partners, and customers globally, and at once. And solutions need to work as well as, if not better than, they would if teams were sitting alongside each other in a physical office. So, the right strategic partner must have modern business acumen and the dynamic expertise that’s necessary to build and effectively implement solutions that enable teams to work effectively and efficiently from anywhere in the world.

Your Bio-IT Partner Can Make or Break Success

We’ll say it again – good is not good enough. And frankly, just good enough is not up to par, either. It takes a uniquely qualified, seasoned and modern Bio-IT partner that understands that the success—and the failure—of a life science company hinges on its ability to innovate, and that your infrastructure is the foundation upon which that ability, and your ability to scale, sits. They must understand which types of solutions work best for each of your business pain points and opportunities, including those that still might be undiscovered. But most importantly, valuable partners can drive and effectively implement necessary changes that enable and position life science companies to reach and surpass their discovery goals. And that’s what it takes in today’s fast-paced world of medical innovation.

So, if you feel like your Bio-IT partner might be underdelivering in any of our top 5 areas, then it might be time to find one that can—and will—truly help you leverage scientific computing innovation to reach your goals.

Get in touch with our team today.

The Journey to 26.2

My oldest daughter, Sophie, completed her first New York City Marathon this year. I’m proud of her for so many reasons and watching her conquer 26.2 miles is a memory I’ll never forget.

Since attending the race, I’ve been thinking a lot about the endurance, determination, and, frankly, the planning that’s required when running a marathon. There are many parallels with running a business to scale.

Often, organizations in growth mode make the mistake of being so focused on the finish line, they lose sight of the many important checkpoints along the way. In doing so their determination may remain strong, but their endurance is likely to suffer.

After nearly 30 years in leadership, ask me how I know ….

Looking Toward the Future One Checkpoint at a Time

Today, reflecting on the race we’ve run here at RCH in 2022, I’m so immensely proud of the distance we’ve covered. Seeing our revenue increase nearly 30% year over year has been exceedingly rewarding. More exciting, however, has been watching our team grow and respond in the face of increasing demand from our customers. In 2022 alone, RCH’s workforce grew by more than 70%. And the best part? We’re not slowing down; not only are we continuously developing and nurturing the growth of our existing team, we’re also actively recruiting for additional talent now and into the New Year.

Much like the marathon runner, we had an opportunity to test ourselves. Working within a biotech and pharma industry that continues to evolve, facing consolidation, budget cuts, exploding innovation, resource constraints, and more, we encountered many important milestones on our path. And importantly, gave ourselves the opportunity to adjust our plan along the way.

And here’s what we know:

Large Pharmas and Emerging Life Sciences Need On-Going R&D IT Run Services

For years, RCH has been the go-to bio-IT partner for R&D teams within many of the world’s top pharmas, providing our specialized Sci-T Managed Services offering based on our experience and understanding of where science and IT intersect. And we’ve been able to because organizations of that scale have a very real need for ongoing, “routine” science-focused IT operational support. The R&D flavor of “keeping the lights on” for their powerful and complex scientific computing environments. We’ve earned the right to be their first call because, frankly, we’re damn good at helping R&D teams and their IT counterparts move science forward without disruption or delay. It’s a role we’re comfortable in, and a role we like.

But there was also a need to shift.

Emerging Start-Ups Also Need Foundational and Project-Based Consulting & Execution

There’s never been a better time to be an emerging biopharma. The opportunities are monumental and the science is some of the best and most exciting I’ve ever seen. But not without challenge.

With limited Bio-IT resources, scientist-heavy teams, and often a compute ecosystem that’s not optimized for performance and scale, we’ve found these firms to have a pressing need for advice before execution. The need for a trusted advisor to help them learn what they don’t even know could be possible. To turn their compute environment and tech stack into a true asset that will not only help them achieve their scientific goals, but also their business goals as they eye the future and what it may hold.

However, although they’re racing toward discovery, development and products, with speed as the top goal, they still need to hit their checkpoints.

This year, RCH has been able to expand beyond our reputation as the “people who just get it done” (though we always knew we were capable of doing much, much more) with ease. Leaning on our experience serving some of the biggest and best teams in the biz, we built out a comprehensive menu of outcome-based Professional Services to meet the needs of quick moving teams like I just described.

Some of those services include assessment and roadmap development, Cloud infrastructure architecture and execution, and a range of what we call “accelerators,” which help teams reach specific goals related to:

- Data and workflow management

- Platform Dev/Ops and automation

- HPC and computing at scale

- AI integration and advancement

- Front-end development and visualization

- Instrumentation and lab support, and

- Specialty scientific application tuning and optimization, among others

And we haven’t stopped there. Several of our recent and forthcoming service engagements include support that goes well beyond R&D, focusing on Clinical and Regulatory Services. It’s a space we’ve been eyeing for a while now, and the time is now right to officially push into this new territory. More to come on that soon ….

Why does this matter? Well, because stopping to reassess and expand how we deliver services—it’s what propelled RCH across one finish line and into the next race. And we did it with many wonderful customers, partners, and supporters along the way.

So, as we prepare to close one year and look toward the next, I offer you the following:

Pushing forward too quickly can, paradoxically, be the formula for slowed discovery, development, and clinical progress, which can lead to stalled outcomes and/or costly missteps. Though innovation requires speed and usually feels like a sprint, scale sometimes warrants a slower burn.

When assessing your path to the best outcomes, consider the marathon runner. Determine a winning pace, understand what must be done to sustain it, and make sure you’re solutioned for long-term success.

And remember, the best competitor is not always the one who crosses every finish line first—but then again, I may be a little biased.

📷: Sophia Riener, NYC Marathon – November 2022

Cryo-EM brings a wealth of potential to drug research. But first, you’ll need to build an infrastructure to support large-scale data movement.

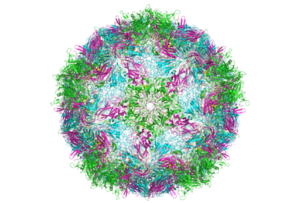

The 2017 Nobel Prize in Chemistry marked a new era for scientific research. Three scientists earned the honor for developing cryo-electron microscopy, a technique that delivers high-resolution imagery of molecular structures. With a better view of nucleic acids, proteins, and other biomolecules, new doors have opened for scientists to discover and develop new medications.

However, implementing cryo-electron microscopy isn’t without its challenges. Most notably, the instrument captures very large datasets that require unique considerations in terms of where they’re stored and how they’re used. The level of complexity and the distinct challenges cryo-EM presents require the support of a highly experienced Bio-IT partner, like RCH, that is actively supporting large and emerging organizations with their cryo-EM implementation and management. But let’s jump into the basics first.

How Our Customers Are Using Cryo-Electron Microscopy

Cryo-electron microscopy (cryo-EM) is revolutionizing biology and chemistry. Our customers are using it to analyze the structures of proteins and other biomolecules with a greater degree of accuracy and speed compared to other methods.

In the past, scientists have used X-ray diffraction to get high-resolution images of molecules. But in order to obtain these images, the molecules first need to be crystallized. This poses two problems. First, many proteins won’t crystallize at all. Second, in those that do, the crystallization can often change the structure of the molecule, which means the imagery won’t be accurate.

Cryo-EM provides a better alternative because it doesn’t require crystallization. What’s more, scientists can gain a clearer view of how molecules move and interact with each other—something that’s extremely hard to do using crystallization.

Cryo-EM can also be used to study larger proteins, complexes of molecules, and membrane-bound receptors. Achieving the same results with nuclear magnetic resonance (NMR) is challenging, as NMR is typically limited to smaller proteins.

Because cryo-EM can give such detailed, accurate images of biomolecules, its use is being explored in the field of drug discovery and development. However, given its $5 million price tag and complex data outputs, it’s essential for labs considering cryo-EM to first have the proper infrastructure in place, including expert support, to avoid it becoming a sunk cost.

The Challenges We’re Seeing With the Implementation of Cryo-EM

Introducing cryo-EM to your laboratory can bring excitement to your team and a wealth of potential to your organization. However, it’s not a decision to make lightly, nor is it one you should make without consulting strategic vendors actively working in the cryo-EM space, like RCH.

The biggest challenge labs face is the sheer amount of data they need to be prepared to manage. The instruments capture very large datasets that require ample storage, access controls, bandwidth, and the ability to organize and use the data.

The instruments themselves bear a high price tag, and adding the appropriate infrastructure increases that cost. The tools also require ongoing maintenance.

There’s also the consideration of upskilling your team to operate and troubleshoot the cryo-EM equipment. Given the newness of the technology, most in-house teams simply don’t have all of the required skills to manage the multiple variables, nor are they likely to have much (or any) experience working on cryo-EM projects.

Biologists are no strangers to undergoing training, so consider this learning curve just a part of professional development. However, when you combine learning how to operate the equipment with learning how to make sense of the data you collect, it’s clear that the learning curve is quite steep. It may take more training and testing than the average implementation project to feel confident in using the equipment.

For these reasons and more, engaging a partner like RCH that can support your firm from the inception of its cryo-EM implementation helps you avoid critical missteps, which ultimately creates more sustainable and future-proof workflows, discovery, and outcomes. With the challenges properly addressed from the start, the promises that cryo-EM holds are worth the extra time and effort it takes to implement it.

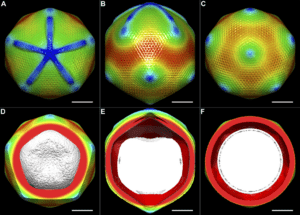

How to Create a Foundational Infrastructure for Cryo-EM Technology

As you consider your options for introducing cryo-EM technology, one of your priorities should be to create an ecosystem in which cryo-EM can thrive in a cloud-first, compute-forward approach. Setting the stage for success, and ensuring you are bringing the compute to the data from inception, can help you reap the most rewards and use your investment wisely.

Here are some of the top considerations for your infrastructure:

- Network Bandwidth

One early study of cryo-EM found that each microscope outputs about 500 GB of data per day. Higher bandwidth can help streamline data processing by increasing download speeds so that data can be more quickly reviewed and used.

- Proximity to and Capacity of Your Data Center

Cryo-EM databases are becoming more numerous and growing in size and scope. The largest data set in the Electron Microscopy Public Image Archive (EMPIAR) is 12.4 TB, while the median data set is about 2 TB. Researchers expect these massive data sets to become the norm for cryo-EM, which means you need to ensure your data center is prepared to handle a growing load of data. This applies to both cloud-first organizations and those with hybrid data storage models.

- Integration with High-Performance Computing

Integrating high-performance computing (HPC) with your cryo-EM environment ensures you can take advantage of the scope and depth of the data created. Scientists will be churning through massive piles of data and turning them into 3D models, which will take exceptional computing power.

- Having the Right Tools in Place

To use cryo-EM effectively, you’ll need to complement your instruments with other tools and software. For example, CryoSPARC is one of the most widely used software packages purpose-built for cryo-EM. Its workflows are configured and optimized specifically for research and drug discovery.

- Availability and Level of Expertise

Because cryo-EM is still relatively new, organizations must decide how to gain the expertise they need to use it to its full potential. This could take several different forms, including hiring consultants, investing in internal knowledge development, and tapping into online resources.
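To make the bandwidth and capacity considerations above more concrete, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted in this post (about 500 GB per microscope per day, a 2 TB median and a 12.4 TB largest EMPIAR data set). The link speeds, the 80% link-efficiency factor, and the decimal unit convention (1 TB = 1,000 GB) are illustrative assumptions, not measurements from any real environment.

```python
def transfer_hours(size_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate hours needed to move `size_gb` gigabytes over a network link.

    link_gbps  -- nominal link speed in gigabits per second (assumed value)
    efficiency -- fraction of nominal speed actually achieved (assumed value)
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be > 0 and efficiency must be in (0, 1]")
    size_gigabits = size_gb * 8  # 1 byte = 8 bits
    seconds = size_gigabits / (link_gbps * efficiency)
    return seconds / 3600


# Data volumes quoted in the post, in decimal units (1 TB = 1,000 GB).
datasets_gb = {
    "one day of output from one microscope": 500,
    "median EMPIAR data set": 2_000,
    "largest EMPIAR data set": 12_400,
}

# Hypothetical link speeds, chosen only for comparison.
for link_gbps in (1, 10, 100):
    print(f"--- {link_gbps} Gbps link at 80% efficiency ---")
    for label, size_gb in datasets_gb.items():
        hours = transfer_hours(size_gb, link_gbps)
        print(f"{label}: about {hours:.2f} hours")
```

Running the numbers for your own link speeds and number of microscopes gives a quick sense of whether data can leave the instrument as fast as it is produced, or whether a backlog will build up.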

How RCH Solutions Can Help You Prepare for Cryo-EM

Implementing cryo-EM is an extensive and costly process, but laboratories can mitigate these and other challenges with the right guidance. It starts with knowing your options and taking all costs and possibilities into account.

Cryo-EM is the new frontier in drug discovery, and RCH Solutions is here to help you remain on the cutting edge of it. We provide tactical and strategic support in developing a cryo-EM infrastructure that will help you generate a return on investment.

Contact us today to learn more.

Sources:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7096719/

https://www.nature.com/articles/d41586-020-00341-9

https://www.gatan.com/techniques/cryo-em

https://www.chemistryworld.com/news/explainer-what-is-cryo-electron-microscopy/3008091.article

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6067001/

Does the Cloud Live up to Its Transformative Reputation?

Studied benefits of Cloud computing in the biotech and pharma fields.

Cloud computing has become one of the most common investments in the pharmaceutical and biotech sectors. If your research and development teams don’t have the processing power to keep up with the deluge of available data for drug discovery and other applications, you’ve likely looked into the feasibility of a digital transformation.

The real-world examples below highlight the effects of Cloud-based computing environments for start-up and growing biopharma companies.

Competitive Advantage

As more competitors move to the Cloud, adopting this agile approach saves your organization from lagging behind. Consider these statistics:

- According to a February 2022 report in Pharmaceutical Technology, mentions of keywords related to Cloud computing in pharma company filings increased by 50% between the second and third quarters of 2021. What’s more, such mentions increased by nearly 150% over the five-year period from 2016 to 2021.

- An October 2021 McKinsey & Company report indicated that 16 of the top 20 pharmaceutical companies have referenced the Cloud in recent press releases.

- As far back as 2020, a PwC survey found that 60% of execs in pharma had either already invested in Cloud tech or had plans for this transition underway.

Accelerated Drug Discovery

In one example cited by McKinsey, Moderna’s first potential COVID-19 vaccine entered clinical trials just 42 days after virus sequencing. CEO Stéphane Bancel credited this unprecedented turnaround time to Cloud technology, which enables scalable and flexible access to droves of existing data and, as Bancel put it, doesn’t require you “to reinvent anything.”

Enhanced User Experience

Both employees and customers prefer to work with brands that show a certain level of digital fluency. In the PwC survey cited above, 42% of health services and pharma leaders reported that better UX was the key priority for Cloud investment. Most participants (91%) predicted that this level of patient engagement will improve individuals’ ability to manage chronic diseases that require medication.

Rapid Scaling Capabilities

Cloud computing platforms can be almost instantly scaled to fit the needs of expanding companies in pharma and biotech. Teams can rapidly increase the capacity of these systems to support new products and initiatives without the investment required to scale traditional IT frameworks. For example, the McKinsey study estimates that companies can reduce the expense associated with establishing a new geographic location by up to 50% by using a Cloud platform.

Are you ready to transform organizational efficiency by shifting your biopharmaceutical lab to a Cloud-based environment? Connect with RCH today to learn how we support our customers in the Cloud with tools that facilitate smart, effective design and implementation of an extendible, scalable Cloud platform customized for your organizational objectives.

References

https://www.mckinsey.com/industries/life-sciences/our-insights/the-case-for-Cloud-in-life-sciences

https://www.pharmaceutical-technology.com/dashboards/filings/Cloud-computing-gains-momentum-in-pharma-filings-with-a-50-increase-in-q3-2021/

https://www.pwc.com/us/en/services/consulting/fit-for-growth/Cloud-transformation/pharmaceutical-life-sciences.html

Attention R&D IT decision makers:

If you’re expecting different results in 2022 despite relying on the same IT vendors and rigid support model that didn’t quite get you to your goal last year, it may be time to hit pause on your plan.

At RCH, we’ve spent the past 30+ years paying close attention to what works, and what doesn’t, while providing specialty scientific computing and research IT support exclusively in the Life Sciences. We’ve put together this list of must-do best practices that you, and especially your external IT partner, should move to the center of your strategy to help you take charge of your R&D IT roadmap.

And if your partner is not giving you this advice to get your project back on track? Well, it may be time to find a new one.

1. Ground Your Plan in Reality

In the high-stakes and often-demanding environment of R&D IT, the tendency to move toward solutioning before fully exposing and diagnosing the extent of the issue or opportunity is very common. However, this approach is not only ineffective, it’s also expensive. Only when your strategy and plan are created to account for where you are today, not where you’d like to be today, can you be confident that they will take you where you want to go. Otherwise, you’ll be taking two steps forward and one (or more) step back the entire time.

2. Start with Good Data

Research IT professionals are often asked to support a wide range of data-related projects. But the reality is, scientists can’t use data to drive good insights if they can’t find or make sense of the data in the first place. Implementing FAIR data practices should be the centerpiece of any scientific computing strategy. Only once you see the full scope of your data needs can you deliver on high-value projects, such as analytics or visualization.

3. Make “Fit-For-Purpose” Your Mantra

Research is never a one-size-fits-all process. Though variables may be consistent based on the parameters of your organization and what has worked well in the past, viewing each challenge as unique affords you the opportunity to leverage best-of-breed design patterns and technologies to answer your needs. Therefore, resist the urge to force a solution, even one that has worked well in other instances, into a framework if it’s not the optimal solution, and opt for a more strategic and tailored approach.

4. Be Clear On the Real Source of Risk

Risk exists in virtually all industries, but in a highly regulated environment, the concept of mitigating risk is ubiquitous, and for good reason. When the integrity of data or processes drives outcomes that can actually influence life or death, accuracy is not only everything, it’s the only thing. And so the tendency is to go with what you know. But ask yourself this: does your effort to minimize risk stifle innovation? In a business built on boundary-breaking innovation, mistaking static for “safe” can be costly. Identifying which projects, processes and/or workloads would be better managed by other, more specialized service providers may actually reduce risk by improving project outcomes.

Reaching Your R&D IT Goals in 2022: A Final Thought

Never substitute experience.

Often, the strategy that leads to many effective scientific and technical computing initiatives within an R&D IT framework differs from a traditional enterprise IT model. And that’s ok because, just as often, the goals do as well. That’s why it is so important to leverage the expertise of R&D IT professionals highly specialized and experienced in this niche space.

Experience takes time to develop. It’s not simply knowing what solutions work or don’t, but rather understanding the types of solutions or solution paths that are optimal for a particular goal, because, well, you’ve “been there, done that.” It’s having the ability to project potential outcomes, in order to influence priorities and workflows. And ultimately, it’s knowing how to find the best design patterns.

It’s this level of specialization — focused expertise combined with real, hands-on experience — that can make all the difference in your ability to realize your outcome.

And if you’re still on the fence about that, just take a look at some of these case studies to see how it’s working for others.

Part 2: How to Tell if Your Computing Partner is Actually Adding Value to Your Research Process – Bridging the Gap Between Science and IT

Part Two in a Five-Part Series for Life Sciences Researchers and IT Professionals

As you continue to evaluate your strategy for 2022 and beyond, it’s important to ensure all facets of your compute environment are optimized, including the partners you hire to support it.

Sometimes companies settle for working with partners that are just “good enough,” but in today’s competitive environment, that type of thinking can break you. What you really need to move the needle is a scientific computing partner who understands both Science and IT.

In part two of this five-part blog series on what you should be measuring your current providers against, we’ll examine how to tell if your external IT partner has the chops to meet the high demands of science while balancing the needs of IT. If you haven’t read our first post, Evaluation Consideration #1: Life Science Specialization and Mastery, you can jump over there first.

Evaluation Consideration #2: Bridging the Gap Between Science and IT

While there are a vast number of IT partners available, it’s important to find someone that has a deep understanding of the scientific industry and community. It can be invaluable to work with a specialized IT group, considering being an expert in one or the other is not enough. The computing consultant that works with clients in varying industries may not have the best combination of knowledge and experience to drive the results you’re looking for.

Your computing partner should have a deep understanding of how your research drives value for your stakeholders. Their ability to leverage opportunities and implement IT infrastructure that meets scientific goals is vital. Therefore, as stated in Consideration #1: Life Science Specialization and Mastery, it’s vital that your IT partner have significant IT experience.

This is an evaluation metric best captured during strategy meetings with your scientific computing lead. Take a moment to consider the IT infrastructure options that are presented to you. Do they use your existing scientific infrastructure as a foundation? Do they require IT skills that your research team has?

These are important considerations because you may end up spending far more than necessary on IT infrastructure that goes underutilized. This will make it difficult for your life science research firm to work competitively towards new discoveries.

The Opportunity Cost of Working with the Wrong Partner is High

Overspending on underutilized IT infrastructure draws valuable IT resources away from critical research initiatives. Missing opportunities to deploy scientific computing solutions in response to scientific needs negatively impacts research outcomes.

Determining if your scientific computing partner is up to the task requires taking a closer look at the quality of expertise you receive. Utilize your strategy meetings to gain insight into the experience and capabilities of your current partners, and pay close attention to Evaluation Consideration #2: Bridging the Gap Between Science and IT. Come back next week to read more about our next critical consideration in your computing partnership, having a High Level of Adaptability.

Part 1: How to Tell if Your Computing Partner is Actually Adding Value to Your Research Process

A Five-Part Series for Life Sciences Researchers and IT Professionals

The New Year is upon us and for most, that’s a time to reaffirm organizational goals and priorities, then develop a roadmap to achieve them. For many enterprise and R&D IT teams, that includes working with external consultants and providers of specialized IT and scientific computing services.

But much has changed in the last year, and more change is coming in the next 12 months. Choosing the right partner is essential to the success of your research and, in a business where speed and performance are critical to your objectives, you don’t want to be the last to know when your partner isn’t working out quite as well as you had planned (and hoped).

But what should you look for in a scientific computing partner?

This blog series will outline five qualities that are essential to consider … and what you should be measuring your current providers against throughout the year to determine if they’re actually adding value to your research and processes.

Evaluation Consideration #1: Life Science Specialization and Mastery

There are many different types of scientific computing consultants and many different types of organizations that rely on them. Life science researchers regularly perform incredibly demanding research tasks and need computing infrastructure that can support those needs in a flexible, scalable way.

A scientific computing consultant that works with a large number of clients in varied industries may not have the unique combination of knowledge and experience necessary to drive best-in-class results in the life sciences.

Managing IT infrastructure for a commercial enterprise is very different from managing IT infrastructure for a life science research organization. Your computing partner should be able to provide valuable, highly specialized guidance that caters to research needs – not generic recommendations for technologies or workflows that are “good enough” for anyone to use.

In order to do this, your computing partner must be able to develop a coherent IT strategy for supporting research goals. Critically, partners should also understand what it takes to execute that strategy, and connect you with the resources you need to see it through.

Today’s Researchers Can’t Settle for “Good Enough”

In the past, the process of scientific discovery left a great deal of room for trial and error. In most cases, there was no alternative but to follow the intuition of scientific leaders, who could spend their entire career focused on solving a single scientific problem.

Today’s research organizations operate in a different environment. The wealth of scientific computing resources and the wide availability of emerging technologies like artificial intelligence (AI) and machine learning (ML) enable brand-new possibilities for scientific discovery.

Scientific research is increasingly becoming a multi-disciplinary process that requires researchers and data scientists to work together in new ways. Choosing the right scientific partner can unlock value for research firms and reduce time-to-discovery significantly.

Best-in-class scientific computing partnerships enable researchers to:

- Predict the most promising paths to scientific discovery and focus research on the avenues most likely to lead to positive outcomes.

- Perform scientific computing on scalable, cloud-enabled infrastructure without overpaying for services they don’t use.

- Automate time-consuming research tasks and dedicate more time and resources to high-impact, strategic initiatives.

- Maintain compliance with local and national regulations without having to compromise on research goals to do so.

If your scientific computing partner is one step ahead of the competition, these capabilities will enable your researchers to make new discoveries faster and more efficiently than ever before.

But finding out whether your scientific computing partner is up to the task requires taking a closer look at the quality of expertise you receive. Pay close attention to Evaluation Consideration #1: Life Science Specialization and Mastery and come back next week to read more about our next critical consideration in your computing partnership, the Ability to Bridge the Gap Between Science and IT.

Why You Need an R Expert on Your Team

R enables researchers to leverage reproducible data science environments.

Life science research increasingly depends on robust data science and statistical analysis to generate insight. Today’s discoveries do not owe their existence to single “eureka” moments but to the steady analysis of experimental data in reproducible environments over time.

The shift towards data-driven research models depends on new ways to gather and analyze data, particularly in very large datasets. Often, researchers don’t know beforehand whether those datasets will be structured or unstructured or what kind of statistical analysis they need to perform in order to reach research goals.

R has become one of the most popular programming languages in the world of data science because it answers these needs. It provides a clear framework for handling and interpreting large amounts of data. As a result, life science research teams are increasingly investing in R expertise in order to meet ambitious research goals.

How R Supports Life Science Research and Development

R is a programming language and environment designed for statistical computing. It’s often compared to Python because the two share several high-profile characteristics. They are both open-source programming languages that excel at data analysis.

The key difference is that Python is a general-purpose language, while R was designed specifically for statistical computing and data analysis. R offers researchers a complete ecosystem for data analysis and comes with an impressive variety of packages and libraries built for this purpose. Python’s popularity relies in part on it being relatively straightforward and easy to learn. Mastering R can be more challenging, but it offers particularly strong solutions for data visualization and statistical analysis. R has earned its place as one of the best languages for scientific computing because it is interpreted, vector-based, and statistical:

- As an interpreted language, R runs without a separate compilation step. Researchers can run code directly and see results immediately, which makes it faster and easier to explore and interpret data using R.

- As a vector language, R lets users apply a function to every element of a vector without writing an explicit loop. For many data operations, this makes R code more concise, and often faster, than equivalent loop-based code.

- As a statistical language, R offers a wide range of data science and visualization libraries ideal for biology, genetics, and other scientific applications.

While the concept behind R is simple enough for some users to get results by learning on the fly, many of its most valuable functions are also its most complex. Life science research teams that employ R experts are well-positioned to address major pain points associated with using R while maximizing the research benefits it provides.

Challenges Life Science Research Teams Face When Implementing R

A large number of life science research teams already use R to some degree. However, fully optimized R implementation is rare in the life science industry. Many teams face steep challenges when obtaining data-driven research results using R:

- Maintaining Multiple Versions of R Packages Can Be Complex

Reproducibility is the defining component of scientific research and software development. Source code control systems make it easy for developers to track and manage different versions of their software, fix bugs, and add new features. However, distributed versioning is much more challenging when third-party libraries and components are involved.

Any R application or script will draw from R’s rich package ecosystem. These packages do not always follow any formal management system. Some come with extensive documentation, and others simply don’t. As developers update their R packages, they may inadvertently break dependencies that certain users rely on. Researchers who try to reproduce results using updated packages may get inaccurate outcomes.

Several high-profile R developers have engineered solutions to this problem. RStudio’s Packrat is a dependency management system for R that lets users reproduce and isolate environments, allowing for easy version control and collaboration between users.

Installing a dependency management system like Packrat can help life science researchers improve their ability to manage R package versions and ensure reproducibility across multiple environments. Life science research teams that employ R experts can make use of this and many other management tools that guarantee smooth, uninterrupted data science workflows.

- Managing and Administrating R Environments Can Be Difficult

There is often a tradeoff between the amount of time spent setting up an R environment and its overall reproducibility. It’s relatively easy to create a programming environment optimized for a particular research task in R with minimal upfront setup time. However, that environment may not end up being easily manageable or reproducible as a result.

It is possible for developers to go back and improve the reproducibility of an ad-hoc project after the fact. This is a common part of the R workflow in many life science research organizations and a critical part of production analysis. However, it’s a suboptimal use of research time and resources that could be better spent on generating new discoveries and insights.

Optimizing the time users spend creating R environments requires considering the eventual reproducibility needs of each environment on a case-by-case basis:

- An ad-hoc exploration may not need any upfront setup since reproduction is unlikely.

- If an exploration begins to stabilize, users can establish a minimally reproducible environment using the session_info utility. It will still take some effort for a future user to rebuild the dependency tree from here.

- For environments that are likely candidates for reproduction, bringing in a dependency management solution like Packrat from the very beginning ensures a high degree of reproducibility.

- For maximum reproducibility, configuring and deploying containers using a solution like Docker guarantees all dependencies are tracked and saved from the start. This requires a significant amount of upfront setup time but ensures a perfectly reproducible, collaboration-friendly environment in R.
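The middle tiers of the list above come down to one idea: record which package versions an analysis used, then check a later environment against that record. The following is a minimal sketch of that idea, written in Python purely for illustration. It is not how session_info or Packrat work internally, and the package names and version strings are hypothetical placeholders.

```python
from typing import Dict, List


def diff_environments(recorded: Dict[str, str], current: Dict[str, str]) -> List[str]:
    """Compare a recorded package snapshot against the current environment.

    Both arguments map package name -> version string. Returns a list of
    human-readable problems; an empty list means the environments match
    for every recorded package.
    """
    problems = []
    for name, version in sorted(recorded.items()):
        if name not in current:
            problems.append(f"{name}: missing (recorded {version})")
        elif current[name] != version:
            problems.append(f"{name}: recorded {version}, found {current[name]}")
    return problems


# Hypothetical snapshot saved when the analysis was first run.
recorded_snapshot = {"pkg_a": "1.2.0", "pkg_b": "0.9.1", "pkg_c": "2.0.0"}

# Hypothetical state of a colleague's environment some months later.
current_environment = {"pkg_a": "1.2.0", "pkg_b": "1.0.0"}

for problem in diff_environments(recorded_snapshot, current_environment):
    print(problem)
```

A snapshot alone only tells you what has drifted. Dependency managers and containers go a step further by restoring the recorded versions for you, which is why they sit higher on the reproducibility scale.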

Identifying the degree of reproducibility each R environment should have requires a great degree of experience working within R’s framework. Expert scientific computing consultants can play a vital role in helping researchers identify the optimal solution for establishing R environments.

- Some Packages Are Complicated and Time-Consuming to Install

R packages are getting larger and more complex, which significantly impacts installation time. Many research teams put considerable effort into minimizing the amount of time and effort spent on installing new R packages.

This can become a major pain point for organizations that rely on continuous integration (CI) services like Travis CI or GitLab CI. The longer it takes for you to get feedback from your CI pipeline, the slower your overall development process runs as a result. Optimized CI pipelines can help researchers spend less time waiting for packages to install and more time doing research.

Combined with version management problems, package installation issues can significantly drag down productivity. Researchers may need to install and test multiple different versions of the same package before arriving at the expected result. Even if a single installation takes ten minutes, that time quickly adds up.

There are several ways to optimize R package installation processes. Research organizations that frequently install packages directly from source code may be able to use a cache utility to reduce compiling time. Advanced versioning and package management solutions can reduce package installation times even further.
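To illustrate why a cache can cut installation time, here is a simplified sketch of the general technique: key each build on a hash of the package name, version, and source, and only compile when that key has not been seen before. It is written in Python for illustration only. The `BuildCache` class and its `_compile` stand-in are hypothetical and do not represent any specific cache utility or R tool.

```python
import hashlib
from typing import Dict


class BuildCache:
    """Toy content-addressed cache for compiled package builds."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.compile_count = 0  # how many times the slow path actually ran

    @staticmethod
    def _key(name: str, version: str, source: bytes) -> str:
        # Identical name, version, and source always produce the same key.
        digest = hashlib.sha256()
        digest.update(f"{name}\0{version}\0".encode("utf-8"))
        digest.update(source)
        return digest.hexdigest()

    def _compile(self, source: bytes) -> str:
        # Stand-in for an expensive compile step (hypothetical).
        self.compile_count += 1
        return "artifact-" + hashlib.sha256(source).hexdigest()[:12]

    def install(self, name: str, version: str, source: bytes) -> str:
        """Return the build artifact, compiling only on a cache miss."""
        key = self._key(name, version, source)
        if key not in self._store:
            self._store[key] = self._compile(source)
        return self._store[key]


demo_cache = BuildCache()
demo_source = b"hypothetical package source code"

# Three installs of the same package version trigger a single compile.
for _ in range(3):
    demo_cache.install("pkg_a", "1.0.0", demo_source)

print("installs requested: 3, compiles performed:", demo_cache.compile_count)
```

In a CI pipeline, the same principle applies when the cache is persisted between runs, so that only packages whose source or version has changed are rebuilt.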

- Troubleshooting R Takes Up Valuable Research Time

While R is simple enough for scientific personnel to learn and use quickly, advanced scientific use cases can become incredibly complex. When this happens, the likelihood of generating errors is high. Troubleshooting errors in R can be a difficult and time-consuming task and is one of the most easily preventable pain points that come with using R.

Scientific research teams that choose to contract scientific computing specialists with experience in R can bypass many of these errors. Having an R expert on board and ready to answer your questions can mean the difference between spending hours resolving a frustrating error code and simply establishing a successful workflow from the start.

R has a dynamic and highly active community, but complex life science research errors may be well outside the scope of R troubleshooting. In environments with strict compliance and cybersecurity rules in place, you may not be able to simply post your session_info data on a public forum and ask for help.

Life science research organizations need to employ R experts to help solve difficult problems, optimize data science workflows, and improve research outcomes. Reducing the amount of time researchers spend attempting to resolve error codes is key to maximizing their scientific output.

RCH Solutions Provides Central Management for Scientific Workflows in R

Life science research firms that rely on scientific computing partners like RCH Solutions can free up valuable research resources while gaining access to R expertise they would not otherwise have. A specialized team of scientific computing experts with experience using R can help life science teams alleviate the pain points described above.

Life science researchers bring a wide range of incredibly valuable scientific expertise to their organizations. This expertise may be grounded in biology, genetics, chemistry, or many other disciplines, but it does not necessarily imply a great deal of experience in performing data science in R. Scientists can do research without a great deal of R knowledge – if they have a reliable scientific computing partner.

RCH Solutions allows life science researchers to centrally manage R packages and libraries. This enables research workflows to make efficient use of data science techniques without costing valuable researcher time or resources.

Without central management, researchers are likely to spend a great deal of time trying to install redundant packages. Having multiple users spend time installing large, complex R packages on the same devices is an inefficient use of valuable research resources. Central management prevents users from having to reinvent the wheel every time they want to create a new environment in R.

Optimize Your Life Science Research Workflow with RCH Solutions

Contracting a scientific computing partner like RCH Solutions means your life science research workflow will always adhere to the latest and most efficient data practices for working in R. Centralized management of R packages and libraries ensures the right infrastructure and tools are in place when researchers need to create R environments and run data analyses.

Find out how RCH Solutions can help you build and implement the appropriate management solution for your life science research applications and optimize deployments in R. We can aid you in ensuring reproducibility in data science applications. Talk to our specialists about your data visualization and analytics needs today.

Challenges and Solutions for Data Management in the Life Science Industry

Bio-IT Teams Must Focus on Five Major Areas in Order to Improve Efficiency and Outcomes

Life Science organizations need to collect, maintain, and analyze a large amount of data in order to achieve research outcomes. The need to develop efficient, compliant data management solutions is growing throughout the Life Science industry, but Bio-IT leaders face diverse challenges to optimization.

These challenges are increasingly becoming obstacles to Life Science teams, where data accessibility is crucial for gaining analytic insight. We’ve identified five main areas where data management challenges are holding these teams back from developing life-saving drugs and treatments.

Five Data Management Challenges for Life Science Firms

Many of the popular applications that Life Science organizations use to manage regulated data are not designed specifically for the Life Science industry. This is one of the main reasons why Life Science teams are facing data management and compliance challenges. Many of these challenges stem from the implementation of technologies not well-suited to meet the demands of science.

Here, we’ve identified five areas where improvements in data management can help drive efficiency and reliability.

1. Manual Compliance Processes

Some Life Sciences teams and their Bio-IT partners are dedicated to leveraging software to automate tedious compliance-related tasks. These include creating audit trails, monitoring for personally identifiable information, and classifying large volumes of documents and data in ways that keep pace with the internal speed of science.

However, many Life Sciences firms remain outside of this trend towards compliance automation. Instead, they perform compliance operations manually, which creates friction when collaborating with partners and weakens the team’s ability to withstand regulatory scrutiny.

Automation can become a key value-generating asset in the Life Science development process. When properly implemented and subjected to a coherent, purpose-built data governance structure, it improves data accessibility without sacrificing quality, security, or retention.
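Two of the tasks named above, monitoring for personally identifiable information and creating audit trails, can be sketched together in Python. This is a minimal illustration under stated assumptions, not a compliance-ready tool: the two regular expressions are simplistic examples, and `find_pii` and `AuditTrail` are hypothetical names. Each audit entry stores the hash of the previous entry, so a later change to any recorded entry is detectable.

```python
import hashlib
import json
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

_FIELDS = ("actor", "action", "detail", "prev")


def find_pii(text):
    """Return the sorted names of the PII patterns found in the text."""
    return sorted(name for name, pat in PII_PATTERNS.items() if pat.search(text))


def _digest(body):
    """Hash an entry body in a stable (sorted-key) JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only log in which each entry is chained to the previous one."""

    def __init__(self):
        self.entries = []

    def record(self, actor, action, detail):
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"actor": actor, "action": action, "detail": detail, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Recompute every hash; return False if any entry was altered."""
        prev = "0" * 64
        for entry in self.entries:
            body = {k: entry[k] for k in _FIELDS}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

In practice the trail would be written to tamper-resistant storage and the scan would run automatically on every new document, which is where the time savings over manual review come from.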

2. Data Security and Integrity

The Life Science industry needs to be able to protect electronic information from unauthorized access. At the same time, certain data must be available to authorized third parties when needed. Balancing these two crucial demands is an ongoing challenge for Life Science and Bio-IT teams.

When data is scattered across multiple repositories and management has little visibility into the data lifecycle, striking that key balance becomes difficult. Determining who should have access to data and how permission to that data should be assigned takes on new levels of complexity as the organization grows.

Life Science organizations need to implement robust security frameworks that minimize the exposure of sensitive data to unauthorized users. This requires core security services that include continuous user analysis, threat intelligence, and vulnerability assessments. These services should sit on top of a Master Data Management (MDM) based data infrastructure that enables secure encryption and permissioning of sensitive data, including intellectual property.

3. Scalable, FAIR Data Principles

Life Science organizations increasingly operate like big data enterprises. They generate large amounts of data from multiple sources and use emerging technologies like artificial intelligence to analyze that data. Where an enterprise may source its data from customers, applications, and third-party systems, Life Science teams get theirs from clinical studies, lab equipment, and drug development experiments.

The challenge that most Life Science organizations face is the management of this data in organizational silos. This impacts the team’s ability to access, analyze, and categorize the data appropriately. It also makes reproducing experimental results much more difficult and time-consuming than it needs to be.

The solution to this challenge involves implementing FAIR data principles in a secure, scalable way. FAIR stands for four main characteristics that well-managed data should have:

Findability. In order to be useful, data must be findable. This means it must be indexed according to terms that IT teams, scientists, auditors, and other stakeholders are likely to search for. It may also mean implementing a Master Data Management (MDM) or metadata-based solution for managing high-volume data.

Accessibility. It’s not enough to simply find data. Authorized users must also be able to access it easily. Accessibility is closely tied to security and compliance, including proper provisioning, permissions, and authentication, but ease and speed of access can also be a difference-maker, which leads to our next point.

Interoperability. When data is formatted in multiple different ways, it falls on users to navigate complex workarounds to derive value from it. If certain users don’t have the technical skills to immediately use data, they will have to wait for the appropriate expertise from a Bio-IT team member, which will drag down overall productivity.

Reusability. Reproducibility is a serious and growing concern among Life Science professionals. Data reusability plays an important role in ensuring experimental insights can be reproduced by independent teams around the world. This can be achieved through containerization technologies that establish a fixed environment for experimental data.
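The findability principle above can be illustrated with a small metadata index in Python. This is a sketch under assumptions rather than a recommended MDM design: `MetadataIndex`, the dataset identifiers, and the metadata fields are all hypothetical. Each dataset is registered with descriptive metadata, and every metadata value is indexed by the terms a scientist or auditor might search for.

```python
from collections import defaultdict


class MetadataIndex:
    """Inverted index from metadata terms to dataset identifiers."""

    def __init__(self):
        self._records = {}
        self._terms = defaultdict(set)

    def register(self, dataset_id, metadata):
        """Store the metadata and index each whitespace-separated term."""
        self._records[dataset_id] = dict(metadata)
        for value in metadata.values():
            for token in str(value).lower().split():
                self._terms[token].add(dataset_id)

    def search(self, query):
        """Return sorted IDs of datasets matching every term in the query."""
        tokens = query.lower().split()
        if not tokens:
            return []
        hits = set.intersection(*(self._terms.get(t, set()) for t in tokens))
        return sorted(hits)


index = MetadataIndex()
index.register("ds-001", {"assay": "RNA-seq", "organism": "Homo sapiens"})
index.register("ds-002", {"assay": "RNA-seq", "organism": "Mus musculus"})
matches = index.search("rna-seq sapiens")  # only ds-001 matches both terms
```

A production system would add controlled vocabularies, persistent identifiers, and access checks, but even this simple structure shows why consistent metadata is the precondition for finding data at all.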

4. Data Management Solutions

The way your Life Sciences team stores and shares data is an integral component of your organization’s overall productivity and flexibility. Organizational silos create bottlenecks that become obstacles to scientific advancement, while robust, accessible data storage platforms enable on-demand analysis that improves time-to-value for various applications.

The three major categories of storage solutions are Cloud, on-premises, and hybrid systems. Each of these presents a unique set of advantages and disadvantages, which serve specific organizational goals based on existing infrastructure and support. Organizations should approach this decision with their unique structure and goals in mind.

Life Science firms that implement an MDM strategy can take important steps towards storing their data effectively while improving security and compliance. MDM provides a single reference point for Life Science data, as well as a framework for enacting meaningful cybersecurity policies that prevent unauthorized access while encouraging secure collaboration.

MDM solutions exist as Cloud-based software-as-a-service licenses, on-premises hardware, and hybrid deployments. Biopharma executives and scientists will need to choose an implementation approach that fits within their projected scope and budget for driving transformational data management in the organization.

Without an MDM strategy in place, Bio-IT teams must expend a great deal of time and effort to organize data effectively. This can be done through a data fabric-based approach, but only if the organization is willing to commit more resources to developing a robust universal IT framework.

5. Monetization

Many Life Science teams don’t adequately monetize data due to compliance and quality control concerns. This is especially true of Life Science teams that still use paper-based quality management systems, as they cannot easily identify the data that they have – much less the value of the insights and analytics it makes possible.

This becomes an even greater challenge when data is scattered throughout multiple repositories, and Bio-IT teams have little visibility into the data lifecycle. There is no easy method to collect these data for monetization or engage potential partners towards commercializing data in a compliant way.

Life Science organizations can monetize data through a wide range of potential partnerships. Organizations to which you may be able to offer high-quality data include:

- Healthcare providers and their partners

- Academic and research institutes

- Health insurers and payer intermediaries

- Patient engagement and solution providers

- Other pharmaceutical research organizations

- Medical device manufacturers and suppliers

In order to do this, you will have to assess the value of your data and provide an accurate estimate of the volume of data you can provide. As with any commercial good, you will need to demonstrate the value of the data you plan on selling and ensure the transaction falls within the regulatory framework of the jurisdiction you do business in.

Overcome These Challenges Through Digital Transformation

Life Science teams that choose the right vendor for digitizing compliance processes are able to overcome these barriers to implementation. Vendors who specialize in Life Sciences can develop compliance-ready solutions designed to meet the unique needs of science, making fast, efficient transformation possible.

RCH Solutions can help teams like yours capitalize on the data your Life Science team generates and give you the competitive advantage you need to make valuable discoveries. Rely on our help to streamline workflows, secure sensitive data, and improve Life Sciences outcomes.

RCH Solutions is a global provider of computational science expertise, helping Life Sciences and Healthcare firms of all sizes clear the path to discovery for nearly 30 years. If you’re interested in learning how RCH can support your goals, get in touch with us.