HPC Migration in the Cloud: Getting it Right from the Start

High-Performance Computing (HPC) has long been an accelerant in the race to discover and develop novel drugs and therapies for both new and well-known diseases. An HPC migration to the Cloud might be your next step to maintain or grow your organization’s competitive advantage.

Whether it’s a full HPC migration to the Cloud or a uniquely architected hybrid approach, evolving your HPC ecosystem to the Cloud brings critical advantages and benefits including:

- Flexibility and scalability

- Optimized costs

- Enhanced security

- Compliance

- Backup, recovery, and failover

- Simplified management and monitoring

With careful planning, strategic design, effective implementation, and the right support, migrating your HPC systems to the Cloud can accelerate breakthroughs and drug discovery.

But with this level of promise and performance come challenges and caveats that require strategic consideration throughout all phases of your supercomputing and HPC development, migration, and management.

So, before you commence your HPC migration from on-premises data centers or traditional HPC clusters to the Cloud, here are some key considerations to keep in mind throughout your planning phase.

1. Assess & Understand Your Legacy HPC Environment

Building a comprehensive migration plan and strategy from inception is necessary for optimization and sustainable outcomes. A proper assessment evaluates the current state of your legacy hardware, software, and data resources, as well as the system’s capabilities, reliability, scalability, and flexibility, with priority given to the security and maintenance of the system.

Gaining a deep and thorough understanding of your current infrastructure and computing environment will help identify existing technical constraints or bottlenecks and inform the order in which components should be migrated. That level of insight can streamline the migration and help your organization circumvent major, arguably avoidable, hurdles.
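One way to turn an assessment into a migration order is to record which components depend on which, then sort them so that nothing moves before the things it relies on. The sketch below is only an illustration of that idea using Python's standard `graphlib` module; the component names and their dependencies are hypothetical placeholders, not a recommended inventory.

```python
from graphlib import TopologicalSorter

# Hypothetical inventory (assumption for illustration only): each component
# maps to the set of components it depends on.
dependencies = {
    "shared-storage": set(),
    "identity-and-access": set(),
    "job-scheduler": {"identity-and-access"},
    "genomics-pipeline": {"job-scheduler", "shared-storage"},
    "visualization-portal": {"genomics-pipeline"},
}


def migration_order(deps):
    """Return components ordered so that every dependency comes first.

    Raises graphlib.CycleError if the inventory contains a circular
    dependency, which is itself a useful finding during assessment.
    """
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    for step, component in enumerate(migration_order(dependencies), start=1):
        print(f"{step}. {component}")
```

Several valid orders may exist for the same inventory; the sort only guarantees that dependencies precede the components that need them.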

2. Determine the Right Cloud Provider and Tooling

Determining the right HPC Cloud provider for your organization can be a complex process, but it is an undeniably critical one. In fact, your entire computing environment depends on it. The process involves researching the available options, comparing features and services, and evaluating cost, reputation, and performance.

Amazon Web Services, Microsoft Azure, and Google Cloud, to name just the three biggest, offer storage and Cloud computing services that drive accelerated innovation by providing fast networking and virtually unlimited infrastructure to store and manage massive data sets, along with the computing power required to analyze them. Many vendors offer different types of cloud infrastructure that run large, complex simulations and deep learning workloads, so it is important to first decide which model (public cloud, private cloud, or hybrid cloud) best meets the needs of your unique HPC workloads.

3. Plan for the Right Design & Deployment

To plan effectively for an HPC migration to the Cloud, it is important to clearly define the objectives, determine the requirements and constraints, identify the expected outcomes, and set a timeline for the project.

From a more technical perspective, it is important to consider each application’s specific requirements, including storage, memory capacity, and any other components needed to run it. If a workload requires a particular operating system, for example, the Cloud environment should be chosen accordingly.

Finally, it is important to understand the networking and security requirements of the application before working through the design phase, and certainly before the deployment phase, of your HPC migration.

The HPC Migration Journey Begins Here…

By properly considering all of these factors, it is possible to effectively plan for your organization’s HPC migration and its ability to leverage the power of supercomputing in drug discovery.

Assuming your plan is comprehensive, effective and sustainable, implementing your HPC migration plan is ultimately still a massive undertaking, particularly for research IT teams likely already overstretched or for an existing Bio-IT vendor lacking specialized knowledge and skills.

So, if your team is ready to take the leap and begin your HPC migration, get in touch with our team today.

The Next Phase of Your HPC Migration in the Cloud

An HPC migration to the Cloud can be an incredibly complex process, but with strategic planning and design, effective implementation, and the right support, your team will be well on its way to sustainable success. Get in touch with our team to learn more about our comprehensive HPC Migration services that support all phases of your HPC migration journey, regardless of which stage you are in.

Implementation and interoperability are key to achieving the benefits of AI in pharma.

Artificial intelligence is showing great promise in streamlining the development of new pharmaceuticals. In fact, a recent LinkedIn poll revealed that AI and emerging tech was the leading opportunity area identified for pharma R&D. But it’s not a silver bullet—implementing AI technologies comes with a range of complexities, especially in aligning them with the existing challenges of drug development. For AI-aided drug discovery to work, pharma companies need the right solutions, support, and expertise to gain the most benefit.

The Promising Future of AI-Aided Drug Discovery

Drug discovery is an incredibly complex, laborious, costly, and lengthy process. Traditionally, it requires extensive manual testing and documentation. On average, a new treatment costs $985 million to develop, with a high trial failure rate being a leading cause of sky-high costs. In fact, only about one in eight treatments that enter the clinical trial phase make it to the market, while the remaining seven are never developed.

AI has the ability to analyze significant volumes of data, predict outcomes, and uncover data similarities to help drive down costs. Making connections between data points in real-time boosts efficiency and reduces the time to discovery—that is, when AI technologies are implemented properly.

AI Challenges That Impact Drug Discovery

The advancement of AI and machine learning is showing great potential in combating some, if not all, of the challenges of traditional drug discovery. But AI-aided drug discovery also invites new challenges of its own.

One such challenge is the potential lack of data to properly feed AI and machine learning technologies. Typically, AI relies on large datasets from which to “learn.” But with unique diseases and rare conditions, there simply isn’t a lot of data for these technologies to ingest. What’s more, these tools typically need years of historical data to identify trends and patterns. Given the frequency of mergers and acquisitions in pharma, original data sources may be unavailable and, therefore, unusable.

McKinsey notes that one of the greatest challenges lies in delivering value at scale. AI should be fully integrated into the company’s scientific processes to gain the full benefit of AI-driven insights. AI-enabled discovery approaches (including via partnerships) are often kept at arm’s length from internal day-to-day R&D. They proceed as an experiment and are not anchored in a biopharma company’s scientific and operational processes to achieve impact at scale.

Additionally, the difficulty of achieving interoperability limits the effectiveness of AI in drug research. Investment in digitized drug discovery capabilities and data sets within internal R&D teams is minimal. Companies frequently leverage partner platforms and enrich their IP rather than build biopharma’s end-to-end tech stack and capabilities. However, data needs to break out of silos and be connected across systems so that outputs can be contextualized. This is easier said than done when data comes from multiple sources in different structures and with varying levels of reliability.

A part of this bigger challenge is the lack of data standardization. Using AI in drug discovery is still very new. The industry as a whole has not defined what constitutes a good data set, nor is there an agreed-upon set of data points that should be included in R&D processes. This opens the door for data bias, especially as some groups of the population have historically been omitted from medical datasets, which could lead to misdiagnoses or unreliable outcomes.

A lack of standardization also invites the potential for regulatory hurdles. Without a standardized way to structure, capture, and store data, pharma companies could be at risk of privacy concerns or non-compliance. The pharma industry is heavily regulated and requires careful documentation and disclosures at every stage of drug development. Adding the AI element to the process will introduce new regulatory considerations to ensure safety, privacy, and thoroughness.

How to Gain Support for AI-Aided Drug Discovery

AI is the future of daily human living, from how we travel, to what we buy, to the pharmaceuticals we take to live a higher quality of life. In Life Sciences, AI will not replace Research Scientists, but Research Scientists who use AI will replace those who don’t. Biotechs and Pharma companies conducting drug discovery and development need an experienced partner that understands this in order to implement AI technologies effectively and drive results.

If your organization is looking to incorporate AI to boost your drug discovery goals, a strategic partner will help you navigate the hurdles and pitfalls from inception. At RCH Solutions, our Bio-IT consultants in Life Sciences understand the intricacies of the pharma industry and how they relate to the use of new technology. We help you implement new solutions in an intentional manner and give them staying power to achieve the greatest possible outcomes.

Download the Emerging Technologies eBook to learn more about the future of AI-aided drug discovery, and get in touch with our team for a consultation.

Because “good” is no longer good enough, see what to look for, and what to avoid, in a specialized Bio-IT partner.

Gone are the days when selecting a strategic Bio-IT partner for your emerging biotech or pharma was a linear or general IT challenge, when good was good enough because business models were less complex and systems were standardized and simple.

Today, opportunities and discoveries that can lead to significant breakthroughs emerge faster than ever. Your scientists need sustainable and efficient computing solutions that enable them to focus on science, at the speed and efficiency that’s necessary in today’s world of medical innovation. The value your Bio-IT partner adds can be the missing link to unlocking and accelerating your organization’s discovery and development goals … or the weight that’s holding you back.

Read on to learn 5 important qualities that you should not only expect but demand from your Bio-IT partner, as well as the red flags that may signal you’re working with the wrong one.

Subject Matter Expertise & Life Science Mastery vs. General IT Expertise & Experience

Your organization needs a Bio-IT partner with the ability to bridge the gap between science and IT, or Sci-T as we call it, and this is only possible when their unique specialization in the life sciences is backed by their proven subject matter expertise in the field. This means your partner should be up-to-date on the latest technologies but, more importantly, have demonstrable knowledge about your business’ unique needs in the landscape in which it’s operating, and be able to provide working recommendations and solutions to get you where you want, and need, to be. That is what separates the IT generalists from subject matter and life science experts.

Vendor Agnostic vs. Vendor Follower

Technologies and programs that suit your biotech or pharma’s evolving needs differ from organization to organization. Your firm has a highly unique position and individualized objectives that require solutions that are just as bespoke, and we get that. Unfortunately, many Bio-IT partners still build their recommendations based on existing and mutually beneficial supplier relationships that they prioritize, alongside their margins, even when significantly better solutions might be available. That’s why seeking a strategic partner that is vendor agnostic is so critical. The right Bio-IT partner will look out for your best interest and focus on solutions that propel you to your desired outcomes most efficiently and effectively, ultimately accelerating your discovery.

Collaborative and Thought Partner vs. Order Taker

Anyone can be an order taker. But your organization doesn’t always know what it wants to order, or what it should order. That is where a collaborative and strategic partner comes in and can be the difference maker. Your strategic Bio-IT partner should spark creativity, drive innovation, and ultimately cultivate business success. They’ll dive deep into your organizational needs to intimately understand what will propel you to your desired outcomes, and recommend agnostic industry-leading solutions that will get you there. Most importantly, they work on effectively implementing them to streamline systems and processes to create a foundation for sustainability and scalability, which is where the game-changing transformation occurs for your organization.

Individualized and Inventive vs. One-Size-Fits-All

A strategic Bio-IT partner needs to understand that success in the life sciences depends on being able to collect, correlate and leverage data to uphold a competitive advantage. But no two organizations are the same, share the same objectives, or have the same considerations and dependencies for a compute environment.

Rather than doing more of the same, your Bio-IT partner should view your organization through your individualized lens and seek fit-for-purpose paths that align to your unique challenges and needs. And because they understand both the business and technology landscapes, they should ask probing questions, and have the right expertise to push beyond the surface, and introduce novel solutions to legacy issues, routinely. The result is a service that helps you accelerate the development of your next scientific breakthrough.

Dynamic and Modern Business Acumen vs. Centralized Business Processes

With the pandemic came new business and work processes and procedures, and employees and offices are no longer centralized like they once were. Or maybe yours never were. Either way, the right Bio-IT partner needs to understand the unique technical requirements and the volume of data and information that is now exchanged between employees, partners, and customers globally, and at once. Solutions need to work as well as, if not better than, they would if teams were sitting alongside each other in a physical office. So, the right strategic partner must have modern business acumen and the dynamic expertise that’s necessary to build and effectively implement solutions that enable teams to work effectively and efficiently from anywhere in the world.

Your Bio-IT Partner Can Make or Break Success

We’ll say it again – good is not good enough. And frankly, just good enough is not up to par, either. It takes a uniquely qualified, seasoned and modern Bio-IT partner that understands that the success—and the failure—of a life science company hinges on its ability to innovate, and that your infrastructure is the foundation upon which that ability, and your ability to scale, sits. They must understand which types of solutions work best for each of your business pain points and opportunities, including those that still might be undiscovered. But most importantly, valuable partners can drive and effectively implement necessary changes that enable and position life science companies to reach and surpass their discovery goals. And that’s what it takes in today’s fast-paced world of medical innovation.

So, if you feel like your Bio-IT partner might be underdelivering in any of our top 5 areas, then it might be time to find one that can—and will—truly help you leverage scientific computing innovation to reach your goals.

My oldest daughter, Sophie, completed her first New York City Marathon this year. I’m proud of her for so many reasons and watching her conquer 26.2 miles is a memory I’ll never forget.

Since attending the race, I’ve been thinking a lot about the endurance, determination, and, frankly, the planning that’s required when running a marathon. There are many parallels with running a business to scale.

Often, organizations in growth mode make the mistake of being so focused on the finish line, they lose sight of the many important checkpoints along the way. In doing so their determination may remain strong, but their endurance is likely to suffer.

After nearly 30 years in leadership, ask me how I know ….

Looking Toward the Future One Checkpoint at a Time

Today, reflecting on the race we’ve run here at RCH in 2022, I’m so immensely proud of the distance we’ve covered. Seeing our revenue increase nearly 30% year over year has been exceedingly rewarding. More exciting, however, has been watching our team grow and respond in the face of increasing demand from our customers. In 2022 alone, RCH’s workforce grew by more than 70%. And the best part? We’re not slowing down; not only are we continuously developing and nurturing the growth of our existing team, we’re also actively recruiting for additional talent now and into the New Year.

Much like the marathon runner, we had an opportunity to test ourselves. Working within a biotech and pharma industry that continues to evolve, facing consolidation, budget cuts, exploding innovation, resource constraints, and more, we encountered many important milestones on our path. And, importantly, we gave ourselves the opportunity to adjust our plan along the way.

And here’s what we know:

Large Pharmas and Emerging Life Sciences Need On-Going R&D IT Run Services

For years, RCH has been the go-to bio-IT partner for R&D teams within many of the world’s top pharmas, providing our specialized Sci-T Managed Services offering based on our experience and understanding of where science and IT intersect. And we’ve been able to because organizations of that scale have a very real need for ongoing, “routine” science-focused IT operational support. The R&D flavor of “keeping the lights on” for their powerful and complex scientific computing environments. We’ve earned the right to be their first call because, frankly, we’re damn good at helping R&D teams and their IT counterparts move science forward without disruption or delay. It’s a role we’re comfortable in, and a role we like.

But there was also a need to shift.

Emerging Start-Ups Also Need Foundational and Project-Based Consulting & Execution

There’s never been a better time to be an emerging biopharma. The opportunities are monumental and the science is some of the best and most exciting I’ve ever seen. But not without challenge.

With limited Bio-IT resources, scientist-heavy teams, and often a compute ecosystem that’s not optimized for performance and scale, we’ve found these firms to have a pressing need for advice before execution. The need for a trusted advisor to help them learn what they don’t even know could be possible. To turn their compute environment and tech stack into a true asset that will not only help them achieve their scientific goals, but also their business goals as they eye the future and what it may hold.

However, although they’re racing toward discovery, development and products, with speed as the top goal, they still need to hit their checkpoints.

This year, RCH has been able to expand with ease beyond our reputation as the “people who just get it done” (though we always knew we were capable of doing much, much more). Leaning on our experience serving some of the biggest and best teams in the biz, we built out a comprehensive menu of outcome-based Professional Services to meet the needs of quick-moving teams like those I just described.

Some of those services include assessment and roadmap development, Cloud infrastructure architecture and execution, and a range of what we call “accelerators,” which help teams reach specific goals related to:

- Data and workflow management

- Platform DevOps and automation

- HPC and computing at scale

- AI integration and advancement

- Front-end development and visualization

- Instrumentation and lab support, and

- Specialty scientific application tuning and optimization, among others

And we haven’t stopped there. Several of our recent and forthcoming service engagements include support that goes well beyond R&D, focusing on Clinical and Regulatory Services. It’s a space we’ve been eyeing for a while now, and the time is right to officially push into this new territory. More to come on that soon ….

Why does this matter? Well, because stopping to reassess and expand how we deliver services—it’s what propelled RCH across one finish line and into the next race. And we did it with many wonderful customers, partners, and supporters along the way.

So, as we prepare to close one year and look toward the next, I offer you the following:

Pushing forward too quickly can, paradoxically, be the formula for slowed discovery, development and clinical progress which can lead to stalled outcomes and/or costly missteps. Though innovation requires speed and usually feels like a sprint, scale sometimes warrants a slower burn.

When assessing your path to the best outcomes, consider the marathon runner. Determine a winning pace, understand what must be done to sustain it, and make sure you’re solutioned for long-term success.

And remember, the best competitor is not always the one who crosses every finish line first—but then again, I may be a little biased.

📷: Sophia Riener, NYC Marathon – November 2022

Cryo-EM brings a wealth of potential to drug research. But first, you’ll need to build an infrastructure to support large-scale data movement.

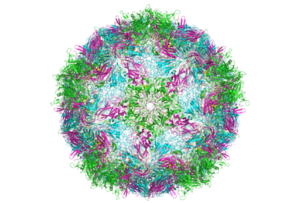

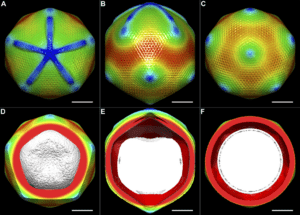

The 2017 Nobel Prize in Chemistry marked a new era for scientific research. Three scientists earned the honor for developing cryo-electron microscopy, a technique that delivers high-resolution imagery of molecule structures. With a better view of nucleic acids, proteins, and other biomolecules, new doors have opened for scientists to discover and develop new medications.

However, implementing cryo-electron microscopy isn’t without its challenges. Most notably, the instrument captures very large datasets that require unique considerations in terms of where they’re stored and how they’re used. The level of complexity and the distinct challenges cryo-EM presents require the support of a highly experienced Bio-IT partner, like RCH, that is actively supporting large and emerging organizations with their cryo-EM implementation and management. But let’s jump into the basics first.

How Our Customers Are Using Cryo-Electron Microscopy

Cryo-electron microscopy (cryo-EM) is revolutionizing biology and chemistry. Our customers are using it to analyze the structures of proteins and other biomolecules with a greater degree of accuracy and speed compared to other methods.

In the past, scientists have used X-ray diffraction to get high-resolution images of molecules. But in order to obtain these images, the molecules first need to be crystallized. This poses two problems. Many proteins won’t crystallize at all, and in those that do, crystallization can often change the structure of the molecule, which means the imagery won’t be accurate.

Cryo-EM provides a better alternative because it doesn’t require crystallization. What’s more, scientists can gain a clearer view of how molecules move and interact with each other, something that’s extremely hard to do using crystallography.

Cryo-EM can also study larger proteins, complexes of molecules, and membrane-bound receptors. Achieving the same results with nuclear magnetic resonance (NMR) is challenging, as NMR is typically limited to smaller proteins.

Because cryo-EM can give such detailed, accurate images of biomolecules, its use is being explored in the field of drug discovery and development. However, given its $5 million price tag and complex data outputs, it’s essential for labs considering cryo-EM to first have the proper infrastructure in place, including expert support, to avoid it becoming a sunk cost.

The Challenges We’re Seeing With the Implementation of Cryo-EM

Introducing cryo-EM to your laboratory can bring excitement to your team and a wealth of potential to your organization. However, it’s not a decision to make lightly, nor is it one you should make without consultation with strategic vendors actively working in the cryo-EM space, like RCH.

The biggest challenge labs face is the sheer amount of data they need to be prepared to manage. The instruments capture very large datasets that require ample storage, access controls, bandwidth, and the ability to organize and use the data.

The instruments themselves bear a high price tag, and adding the appropriate infrastructure increases that cost. The tools also require ongoing maintenance.

There’s also the consideration of upskilling your team to operate and troubleshoot the cryo-EM equipment. Given the newness of the technology, most in-house teams simply don’t have all of the required skills to manage the multiple variables, nor are they likely to have much (or any) experience working on cryo-EM projects.

Biologists are no strangers to undergoing training, so consider this learning curve just a part of professional development. However, because your team must learn both how to operate the equipment and how to make sense of the data it collects, the learning curve is quite steep. It may take more training and testing than the average implementation project to feel confident in using the equipment.

For these reasons and more, engaging a partner like RCH that can support your firm from the inception of its cryo-EM implementation ensures critical missteps are circumvented, which ultimately creates more sustainable and future-proof workflows, discovery, and outcomes. With the challenges properly addressed from the start, the promises that cryo-EM holds are worth the extra time and effort it takes to implement it.

How to Create a Foundational Infrastructure for Cryo-EM Technology

As you consider your options for introducing cryo-EM technology, one of your priorities should be to create an ecosystem in which cryo-EM can thrive in a cloud-first, compute-forward approach. Setting the stage for success, and ensuring you are bringing the compute to the data from inception, can help you reap the most rewards and use your investment wisely.

Here are some of the top considerations for your infrastructure:

- Network Bandwidth

One early study of cryo-EM found that each microscope outputs about 500 GB of data per day. Higher bandwidth can help streamline data processing by increasing download speeds so that data can be more quickly reviewed and used.

- Proximity to and Capacity of Your Data Center

Cryo-EM databases are becoming more numerous and growing in size and scope. The largest data set in the Electron Microscopy Public Image Archive (EMPIAR) is 12.4 TB, while the median data set is about 2 TB. Researchers expect these massive data sets to become the norm for cryo-EM, which means you need to ensure your data center is prepared to handle a growing load of data. This applies to both cloud-first organizations and those with hybrid data storage models.

- Integration with High-Performance Computing

Integrating high-performance computing (HPC) with your cryo-EM environment ensures you can take advantage of the scope and depth of the data created. Scientists will be churning through massive piles of data and turning them into 3D models, which will take exceptional computing power.

- Having the Right Tools in Place

To use cryo-EM effectively, you’ll need to complement your instruments with other tools and software. For example, CryoSPARC is the most common software that’s purpose-built for cryo-EM technology. It has configured and optimized the workflows specifically for research and drug discovery.

- Availability and Level of Expertise

Because cryo-EM is still relatively new, organizations must decide how to gain the expertise they need to use it to its full potential. This could take several different forms, including hiring consultants, investing in internal knowledge development, and tapping into online resources.
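To make the bandwidth and capacity figures above more concrete, here is a rough back-of-the-envelope sketch in Python. It uses the numbers quoted in this article (about 500 GB per microscope per day, and EMPIAR data sets of roughly 2 TB and 12.4 TB). Everything else is an assumption chosen for illustration: decimal units, a link that delivers 70% of its nominal rate, and 250 operating days per year. Treat the results as a starting point for a sizing conversation, not as a measurement.

```python
# Back-of-the-envelope sizing for cryo-EM data movement and storage.
# From the article: ~500 GB per microscope per day; EMPIAR data sets of
# about 2 TB (median) and 12.4 TB (largest). All other values are assumptions.

GB = 10**9   # decimal gigabyte, in bytes
TB = 10**12  # decimal terabyte, in bytes
SECONDS_PER_DAY = 86_400


def sustained_mbps(bytes_per_day: float) -> float:
    """Average megabits per second needed to move one day's output in 24 hours."""
    return bytes_per_day * 8 / SECONDS_PER_DAY / 1e6


def transfer_hours(size_bytes: float, link_gbps: float, efficiency: float = 0.7) -> float:
    """Hours to move size_bytes over a link of link_gbps gigabits per second.

    efficiency is the assumed usable fraction of the nominal line rate.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and efficiency in (0, 1]")
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return size_bytes * 8 / usable_bits_per_second / 3600


def yearly_storage_tb(microscopes: int, gb_per_day: float = 500,
                      operating_days: int = 250) -> float:
    """Raw terabytes generated per year, before any compression or pruning."""
    return microscopes * gb_per_day * operating_days * GB / TB


if __name__ == "__main__":
    print(f"Sustained rate for 500 GB/day: {sustained_mbps(500 * GB):.1f} Mbps")
    for gbps in (1, 10):
        hours = transfer_hours(12.4 * TB, gbps)
        print(f"12.4 TB over a {gbps} Gbps link: {hours:.1f} hours")
    print(f"Two microscopes, one year: {yearly_storage_tb(2):.0f} TB")
```

Note that the sustained rate is only an average; microscopes produce data in bursts, so peak demand on the network will be higher than this figure suggests.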

How RCH Solutions Can Help You Prepare for Cryo-EM

Implementing cryo-EM is an extensive and costly process, but laboratories can mitigate these and other challenges with the right guidance. It starts with knowing your options and taking all costs and possibilities into account.

Cryo-EM is the new frontier in drug discovery, and RCH Solutions is here to help you remain on the cutting edge of it. We provide tactical and strategic support in developing a cryo-EM infrastructure that will help you generate a return on investment.

Contact us today to learn more.

Thoughts from our CEO, Michael Riener

Now more than ever, creating a culture that encompasses all of the elements necessary to ensure employee, customer and organizational success and exceptional outcomes is critical. Why? Our industry is more challenged than ever with talent acquisition, development and retention, not to mention the sky-high demands for services and support and the impact this has on our team members.

As industry and organizational leaders, we need to meet these challenges with strong calls to action and initiatives to create change.

For this reason and more, hiring the right people who share our core values, and building a culture around a team that embraces the RCH Solutions DNA, is paramount. It’s our opportunity to get things right from inception, for the organization and our team members, and to lead with our non-negotiable core values.

The Current Challenges

The Biopharma, Biotech and Life Sciences industries are experiencing record burnout. Most recently, the pharmaceuticals, biotechnology and life sciences category fell almost 3 points (2.9) between the first and second quarter of 2022, per corporate reputation monitor RepTrak, as outlined in the article linked below. But what does this actually mean? The decline in this near-real-time measure underscores the challenges that exist both internally and externally for stakeholders and organizations, which, if not met with actions and initiatives, could have devastating impacts.

The Supporting Solutions

But how can we realistically form meaningful actions to improve our position and outcomes? Lean back into or overhaul your company values. Too often companies are reactive and wait to fix and troubleshoot when a problem exists. Why not proactively set our team members up for success from the beginning? By taking care of our teams and nurturing their personal and professional growth and experience, we can, in turn, empower them to deliver their best for our organization and customers.

What We’re Doing at RCH

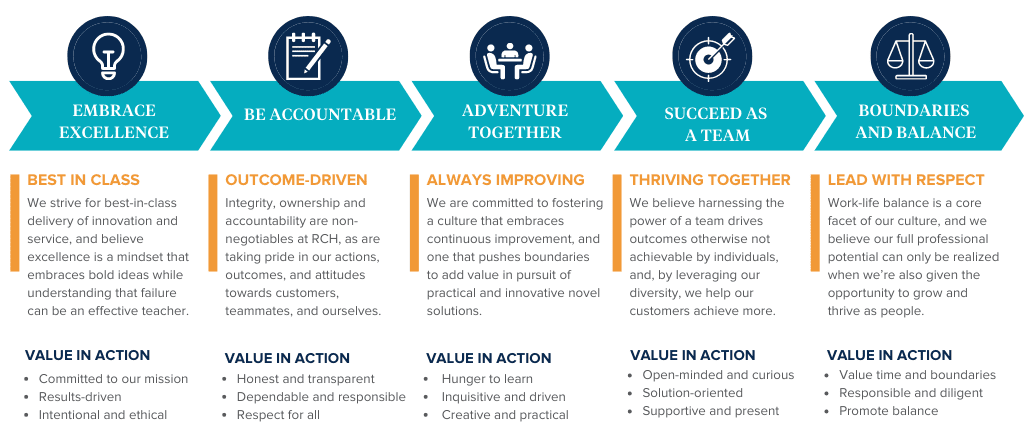

At RCH, our core values are in place to do just that, and are far more than just words — they unwaveringly represent the threads that weave together the fabric of our culture. And we truly mean it, expect it and live and breathe it. As an organization we:

- Embrace Excellence

- Expect Accountability

- Adventure Together

- Succeed as a Team

- Respect Boundaries & Balance

But what do these really mean and what do they look like? To extend upon our core values, and to illustrate how these are measured and look in action, see the infographic below.

These core values are also consistently reinforced by our customers in recognition of specific team members, and we use these foundational principles for just about everything, from serving as a guide when interviewing new team members to a barometer when evaluating our performance as individuals and teams, and even when deciding which customers to work with. Our values embody the behaviors upon which we measure our success and create a framework for our growth as people and professionals.

I am very confident that our teams feel these values in action daily at RCH. Their commitment and buy-in to these beliefs are evident and flow through every interaction we have and the outcomes we deliver collectively, both internally and externally. That matters to us, and it should matter to you too.

In Life Sciences, and medical fields in particular, there is a premium on expertise and the role of a specialist. When it comes to scientists, researchers, and doctors, even a single high-performer who brings advanced knowledge in their field often contributes more value than a few average generalists who may only have peripheral knowledge. Despite this premium placed on specialization or top-talent as an industry norm, many life science organizations don’t always follow the same measure when sourcing vendors or partners, particularly those in the IT space.

And that’s a misstep. Here’s why.

Why “A” Talent Matters

I’ve seen far too many organizations that had, or still have, the above strategy, and also many that focus on acquiring and retaining top talent. The difference? The former experienced slow adoption, which stalled outcomes and often had major impacts on their short- and long-term objectives. The latter propelled their outcomes out of the gate, circumventing crippling mistakes along the way. For this reason and more, I’m a big believer in attracting and retaining only “A” talent. The best talent and the top performers (Quality) will always outshine and outdeliver a bunch of average ones. Most often, those individuals are inherently motivated and engaged, and when put in an environment where their skills are both nurtured and challenged, they thrive.

Why Expertise Prevails

While low-cost IT service providers with deep rosters may be able to throw a greater number of people at problems than their smaller, boutique counterparts, often the outcome is simply more people and more problems. Instead, life science teams should aim to follow their R&D talent acquisition processes and focus on value and what it will take to achieve the best outcomes in this space. Most often, it’s not about the quantity of support, advice, and execution resources, but about quality.

Why Our Customers Choose RCH

Our customers are like-minded and also employ top talent, which is why they value RCH: we consistently serve them with the best. While some organizations feel that throwing bodies (Quantity) at a problem is one answer, often one for optics, RCH does not. We never have. Sometimes you can get by with a generalist; however, in our industry, we have found that our customers require and deserve specialists. The outcomes are more successful. The results are what they seek: seamless transformation.

In most cases, we are engaged with a customer who has employed the services of a very large professional services or system integration firm. Increasingly, those customers are turning to RCH to deliver on projects typically reserved for those large, expensive, process-laden companies. The reason is simple. There is much to be said for a focused, agile and proven company.

Why Many Firms Don’t Restrategize

So why do organizations continue to complain but rely on companies such as these? The answer has become clear—risk aversion. But the outcomes of that reliance are typically just increased costs, missed deadlines or major strategic adjustments later on – or all of the above. But why not choose an alternative strategy from inception? I’m not suggesting turning over all business to a smaller organization. But, how about a few? How about those that require proven focus, expertise and the track record of delivery? I wrote a piece last year on the risk of mistaking “static for safe,” and stifling innovation in the process. The message still holds true.

We all know that scientific research is well on its way to becoming, if it is not already, a multi-disciplinary, highly technical process that requires diverse and cross-functional teams to work together in new ways. Engaging a quality Scientific Computing partner that matches that expertise with only “A” talent, and with the specialized skills, service model and experience to meet research needs, can be a difference-maker in the success of a firm’s research initiatives.

My take? Quality trumps quantity—always in all ways. Choose a scientific computing partner whose services reflect the specialized IT needs of your scientific initiatives and can deliver robust, consistent results. Get in touch with me below to learn more.

If You’re Not Doing These Five Things to Improve Research Outcomes, Start Now

Effective research and development programs are still one of the most significant investments of any biopharma. In fact, Seed Scientific estimates that the current global scientific research market is worth $76 billion, including $33.44 billion in the United States alone. Despite the incredible advancements in technology now aiding scientific discovery, it’s still difficult for many organizations to effectively bridge the gap between the business and IT, and fully leverage innovation to drive the most value out of their R&D product.

If you’re in charge of your organization’s R&D IT efforts, following tried and true best practices may help. Start with these five strategies to help the business you’re supporting drive better research outcomes.

Tip #1: Practice Active Listening

Instead of jumping to a response when presented with a business challenge, start by listening to research teams and other stakeholders and learning more about their experiences and needs. The process of active listening, which involves asking questions to create a more comprehensive understanding of the issue at hand, can lead to new avenues of inspiration and help internal IT organizations better understand the challenges and opportunities before them.

Tip #2: Plan Backwards

Proper planning is a must for most scientific computing initiatives. But one particularly interesting method for accomplishing an important goal, such as moving workloads to the Cloud or optimizing your applications and workflows for global collaboration, is to start with a premortem. During this brainstorming session, team members and other stakeholders can predict possible challenges and other roadblocks and ideate viable solutions before any work begins. Research by Harvard Business Review shows this process can improve the identification of the underlying causes of issues by 30% and ultimately help drive better project and research outcomes.

Tip #3: Consider the Process, Not Just the Solution

Research scientists know all too well that discovering a viable solution is merely the beginning of a long journey to market. It serves R&D IT teams well to consider the same when developing and implementing platform solutions for business needs. Whether a system within a compute environment needs to be maintained, upgraded, or retired, R&D IT teams must prepare to adjust their approach based on the business’ goals, which may shift as a project progresses. Therefore, maintaining a flexible and agile approach throughout the project process is critical.

Tip #4: Build a Specialized R&D IT Team

Any member of an IT team working in support of the unique scientific computing needs of the business (as opposed to more common enterprise IT efforts) should possess both knowledge and experience in the specific tools, applications, and opportunities within scientific research and discovery. Moreover, they should have the skills to quickly identify and shift to the most important priorities as they evolve and adapt to new methods and initiatives that support improved research outcomes. If you don’t have these resources on staff, consider working with a specialized scientific computing partner to bridge this gap.

Tip #5: Prepare for the Unexpected

In research compute, it’s not enough to have a fall-back plan—you need a back-out plan as well. Being able to pivot quickly and respond appropriately to an unforeseen challenge or opportunity is mission-critical. Better yet, following best practices that mitigate risk and enable contingency planning from the start (like implementing an infrastructure-as-code protocol), can help the business you’re supporting avoid crippling delays in their research process.

While this isn’t an exhaustive list, these five strategies provide an immediate blueprint to improve collaboration between R&D IT teams and the business, and support better research outcomes through smarter scientific computing. But if you’re looking for more support, RCH Solutions’ specialized Sci-T Managed Services could be the answer. Learn more about this specialized service here.

Attention R&D IT decision makers:

If you’re expecting different results in 2022 despite relying on the same IT vendors and rigid support model that didn’t quite get you to your goal last year, it may be time to hit pause on your plan.

At RCH, we’ve spent the past 30+ years paying close attention to what works, and what doesn’t, while providing specialty scientific computing and research IT support exclusively in the Life Sciences. We’ve put together this list of must-do best practices that you, and especially your external IT partner, should move to the center of your strategy to help you take charge of your R&D IT roadmap.

And if your partners are not giving you this advice to get your project back on track? Well, it may be time to find new ones.

1. Ground Your Plan in Reality

In the high-stakes and often-demanding environment of R&D IT, the tendency to move toward solutioning before fully exposing and diagnosing the extent of the issue or opportunity is very common. However, this approach is not only ineffective, it’s also expensive. Only when your strategy and plan are created to account for where you are today, not where you’d like to be today, can you be confident that they will take you where you want to go. Otherwise, you’ll be taking two steps forward and one (or more) steps back the entire time.

2. Start with Good Data

Research IT professionals are often asked to support a wide range of data-related projects. But the reality is, scientists can’t use data to drive good insights if they can’t find or make sense of the data in the first place. Implementing FAIR data practices should be the centerpiece of any scientific computing strategy. Only once you see the full scope of your data needs can you deliver on high-value projects, such as analytics or visualization.

3. Make “Fit-For-Purpose” Your Mantra

Research is never a one-size-fits-all process. Though variables may be consistent based on the parameters of your organization and what has worked well in the past, viewing each challenge as unique affords you the opportunity to leverage best-of-breed design patterns and technologies to answer your needs. Therefore, resist the urge to force a solution, even one that has worked well in other instances, into a framework if it’s not the optimal solution, and opt for a more strategic and tailored approach.

4. Be Clear On the Real Source of Risk

Risk exists in virtually all industries, but in a highly regulated environment, the concept of mitigating risk is ubiquitous, and for good reason. When the integrity of data or processes drives outcomes that can actually influence life or death, accuracy is not only everything, it’s the only thing. And so the tendency is to go with what you know. But ask yourself this: does your effort to minimize risk stifle innovation? In a business built on boundary-breaking innovation, mistaking static for “safe” can be costly. Identifying which projects, processes and/or workloads would be better managed by other, more specialized service providers may actually reduce risk by improving project outcomes.

Reaching Your R&D IT Goals in 2022: A Final Thought

Never substitute experience.

Often, the strategy that leads to many effective scientific and technical computing initiatives within an R&D IT framework differs from a traditional enterprise IT model. And that’s ok because, just as often, the goals do as well. That’s why it is so important to leverage the expertise of R&D IT professionals highly specialized and experienced in this niche space.

Experience takes time to develop. It’s not simply knowing what solutions work or don’t, but rather understanding the types of solutions or solution paths that are optimal for a particular goal, because, well, ‘been there, done that.’ It’s having the ability to project potential outcomes, in order to influence priorities and workflows. And ultimately, it’s knowing how to find the best design patterns.

It’s this level of specialization — focused expertise combined with real, hands-on experience — that can make all the difference in your ability to realize your outcome.

And if you’re still on the fence about that, just take a look at some of these case studies to see how it’s working for others.

Bio-IT Teams Must Focus on Five Major Areas in Order to Improve Efficiency and Outcomes

Life Science organizations need to collect, maintain, and analyze a large amount of data in order to achieve research outcomes. The need to develop efficient, compliant data management solutions is growing throughout the Life Science industry, but Bio-IT leaders face diverse challenges to optimization.

These challenges are increasingly becoming obstacles to Life Science teams, where data accessibility is crucial for gaining analytic insight. We’ve identified five main areas where data management challenges are holding these teams back from developing life-saving drugs and treatments.

Five Data Management Challenges for Life Science Firms

Many of the popular applications that Life Science organizations use to manage regulated data are not designed specifically for the Life Science industry. This is one of the main reasons why Life Science teams are facing data management and compliance challenges. Many of these challenges stem from the implementation of technologies not well-suited to meet the demands of science.

Here, we’ve identified five areas where improvements in data management can help drive efficiency and reliability.

1. Manual Compliance Processes

Some Life Sciences teams and their Bio-IT partners are dedicated to leveraging software to automate tedious compliance-related tasks. These include creating audit trails, monitoring for personally identifiable information, and classifying large volumes of documents and data in ways that keep pace with the internal speed of science.

However, many Life Sciences firms remain outside of this trend towards compliance automation. Instead, they perform compliance operations manually, which creates friction when collaborating with partners and drags down the team’s ability to meet regulatory scrutiny.

Automation can become a key value-generating asset in the Life Science development process. When properly implemented and subjected to a coherent, purpose-built data governance structure, it improves data accessibility without sacrificing quality, security, or retention.
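As a rough illustration of what automating two of the tasks mentioned above (monitoring for personally identifiable information and creating audit trails) might look like, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the two regular expressions, the `find_pii` helper, and the `AuditTrail` class are hypothetical, and a production system would need a vetted pattern set, durable storage, and validation against the applicable regulations.

```python
import hashlib
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only (assumption); real PII detection needs a far broader, vetted set.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_pii(text):
    """Return a sorted list of the PII categories whose pattern matches the text."""
    return sorted(name for name, pattern in PII_PATTERNS.items() if pattern.search(text))


class AuditTrail:
    """In-memory, append-only log in which each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    def record(self, actor, action, detail):
        """Append an entry chained to the previous entry's hash and return it."""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "detail": detail,
            "previous_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = self._digest(entry)  # computed before the "hash" key exists
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; False means an entry was altered, removed, or reordered."""
        previous_hash = ""
        for entry in self.entries:
            body = {key: value for key, value in entry.items() if key != "hash"}
            if entry["previous_hash"] != previous_hash or self._digest(body) != entry["hash"]:
                return False
            previous_hash = entry["hash"]
        return True

    @staticmethod
    def _digest(body):
        serialized = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(serialized).hexdigest()


# Usage: scan a document and log the result of the scan.
trail = AuditTrail()
categories = find_pii("Contact jane.doe@example.org about sample 42")
trail.record("scanner", "pii_scan", {"document": "notes.txt", "categories": categories})
```

Chaining each entry to the hash of the one before it is one common way to make after-the-fact edits detectable; it does not by itself make a log compliant.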

2. Data Security and Integrity

The Life Science industry needs to be able to protect electronic information from unauthorized access. At the same time, certain data must be available to authorized third parties when needed. Balancing these two crucial demands is an ongoing challenge for Life Science and Bio-IT teams.

When data is scattered across multiple repositories and management has little visibility into the data lifecycle, striking that key balance becomes difficult. Determining who should have access to data and how permission to that data should be assigned takes on new levels of complexity as the organization grows.

Life Science organizations need to implement robust security frameworks that minimize the exposure of sensitive data to unauthorized users. This requires core security services that include continuous user analysis, threat intelligence, and vulnerability assessments, on top of a Master Data Management (MDM) based data infrastructure that enables secure encryption and permissioning of sensitive data, including intellectual property.
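To make the balance between restricting access and serving authorized third parties a little more concrete, the sketch below shows one simple way a permission check could be modeled in Python. The sensitivity levels, role names, and the `can_read` function are all hypothetical, chosen for illustration; a real deployment would typically rely on the access controls of its identity provider or data platform rather than hand-written rules.

```python
from dataclasses import dataclass

# Hypothetical sensitivity levels, ordered from least to most sensitive.
SENSITIVITY_ORDER = ["public", "internal", "restricted"]

# Hypothetical mapping from role to the highest sensitivity that role may read.
ROLE_CLEARANCE = {
    "external_partner": "public",
    "scientist": "internal",
    "data_steward": "restricted",
}


@dataclass(frozen=True)
class Dataset:
    name: str
    sensitivity: str  # one of SENSITIVITY_ORDER


def can_read(role, dataset, explicit_grants=frozenset()):
    """Allow access through an explicit (role, dataset name) grant or sufficient clearance."""
    if (role, dataset.name) in explicit_grants:
        return True
    clearance = ROLE_CLEARANCE.get(role)
    if clearance is None:
        return False  # unknown roles are denied by default
    return SENSITIVITY_ORDER.index(dataset.sensitivity) <= SENSITIVITY_ORDER.index(clearance)


# Usage: a partner is denied by default but can be granted one specific dataset.
trial_data = Dataset(name="trial-results", sensitivity="restricted")
grants = frozenset({("external_partner", "trial-results")})
partner_default = can_read("external_partner", trial_data)
partner_granted = can_read("external_partner", trial_data, grants)
```

Denying unknown roles by default and treating third-party access as an explicit, per-dataset grant keeps exceptions visible and easy to review as the organization grows.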

3. Scalable, FAIR Data Principles

Life Science organizations increasingly operate like big data enterprises. They generate large amounts of data from multiple sources and use emerging technologies like artificial intelligence to analyze that data. Where an enterprise may source its data from customers, applications, and third-party systems, Life Science teams get theirs from clinical studies, lab equipment, and drug development experiments.

The challenge that most Life Science organizations face is the management of this data in organizational silos. This impacts the team’s ability to access, analyze, and categorize the data appropriately. It also makes reproducing experimental results much more difficult and time-consuming than it needs to be.

The solution to this challenge involves implementing FAIR data principles in a secure, scalable way. The FAIR data management system relies on four main characteristics:

Findability. In order to be useful, data must be findable. This means it must be indexed according to terms that IT teams, scientists, auditors, and other stakeholders are likely to search for. It may also mean implementing a Master Data Management (MDM) or metadata-based solution for managing high-volume data.

Accessibility. It’s not enough to simply find data. Authorized users must also be able to access it, and easily. Accessibility is clearly related to security and compliance, including proper provisioning, permissions, and authentication, but ease and speed of access can also be a difference-maker, which leads to our next point.

Interoperability. When data is formatted in multiple different ways, it falls on users to navigate complex workarounds to derive value from it. If certain users don’t have the technical skills to immediately use data, they will have to wait for the appropriate expertise from a Bio-IT team member, which will drag down overall productivity.

Reusability. Reproducibility is a serious and growing concern among Life Science professionals. Data reusability plays an important role in ensuring experimental insights can be reproduced by independent teams around the world. This can be achieved through containerization technologies that establish a fixed environment for experimental data.
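As a small illustration of the findability principle described above, the following Python sketch indexes datasets by the terms in their metadata so they can be found by the words stakeholders are likely to search for. The `MetadataCatalog` class, the dataset identifiers, and the metadata fields are hypothetical, and the in-memory index merely stands in for whatever MDM or metadata platform an organization actually uses.

```python
from collections import defaultdict


class MetadataCatalog:
    """Toy in-memory catalog that indexes datasets by the words in their metadata."""

    def __init__(self):
        self._records = {}
        self._index = defaultdict(set)  # search term -> set of dataset ids

    def register(self, dataset_id, metadata):
        """Store the metadata and index every whitespace-separated term in its values."""
        self._records[dataset_id] = metadata
        for value in metadata.values():
            for term in str(value).lower().split():
                self._index[term].add(dataset_id)

    def search(self, query):
        """Return the sorted ids of datasets whose metadata contains every query term."""
        terms = query.lower().split()
        if not terms:
            return []
        matches = set.intersection(*(self._index.get(term, set()) for term in terms))
        return sorted(matches)


# Usage with made-up dataset ids and metadata fields.
catalog = MetadataCatalog()
catalog.register("ds-001", {"assay": "RNA-seq", "organism": "Homo sapiens", "instrument": "NovaSeq"})
catalog.register("ds-002", {"assay": "proteomics", "organism": "Homo sapiens"})
human_datasets = catalog.search("homo sapiens")
```

The point of the sketch is that findability depends on consistent, searchable metadata captured at registration time, not on where the underlying files happen to live.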

4. Data Management Solutions

The way your Life Sciences team stores and shares data is an integral component of your organization’s overall productivity and flexibility. Organizational silos create bottlenecks that become obstacles to scientific advancement, while robust, accessible data storage platforms enable on-demand analysis that improves time-to-value for various applications.

The three major categories of storage solutions are Cloud, on-premises, and hybrid systems. Each of these presents a unique set of advantages and disadvantages, which serve specific organizational goals based on existing infrastructure and support. Organizations should approach this decision with their unique structure and goals in mind.

Life Science firms that implement an MDM strategy are able to take important steps towards storing their data while improving security and compliance. MDM provides a single reference point for Life Science data, as well as a framework for enacting meaningful cybersecurity policies that prevent unauthorized access while encouraging secure collaboration.

MDM solutions exist as Cloud-based software-as-a-service licenses, on-premises hardware, and hybrid deployments. Biopharma executives and scientists will need to choose an implementation approach that fits within their projected scope and budget for driving transformational data management in the organization.

Without an MDM strategy in place, Bio-IT teams must expend a great deal of time and effort to organize data effectively. This can be done through a data fabric-based approach, but only if the organization is willing to leverage more resources towards developing a robust universal IT framework.

5. Monetization

Many Life Science teams don’t adequately monetize data due to compliance and quality control concerns. This is especially true of Life Science teams that still use paper-based quality management systems, as they cannot easily identify the data that they have – much less the value of the insights and analytics it makes possible.

This becomes an even greater challenge when data is scattered throughout multiple repositories, and Bio-IT teams have little visibility into the data lifecycle. There is no easy method to collect these data for monetization or engage potential partners towards commercializing data in a compliant way.

Life Science organizations can monetize data through a wide range of potential partnerships. Organizations to which you may be able to offer high-quality data include:

- Healthcare providers and their partners

- Academic and research institutes

- Health insurers and payer intermediaries

- Patient engagement and solution providers

- Other pharmaceutical research organizations

- Medical device manufacturers and suppliers

In order to do this, you will have to assess the value of your data and provide an accurate estimate of the volume of data you can provide. As with any commercial good, you will need to demonstrate the value of the data you plan on selling and ensure the transaction falls within the regulatory framework of the jurisdiction you do business in.

Overcome These Challenges Through Digital Transformation

Life Science teams who choose the right vendor for digitizing compliance processes are able to overcome these barriers to implementation. Vendors who specialize in Life Sciences can develop compliance-ready solutions designed to meet the incredibly unique needs of science, making fast, efficient transformation a possibility.

RCH Solutions can help teams like yours capitalize on the data your Life Science team generates and give you the competitive advantage you need to make valuable discoveries. Rely on our help to streamline workflows, secure sensitive data, and improve Life Sciences outcomes.

RCH Solutions is a global provider of computational science expertise, helping Life Sciences and Healthcare firms of all sizes clear the path to discovery for nearly 30 years. If you’re interested in learning how RCH can support your goals, get in touch with us here.