The Power of AWS Certifications in Cloud Strategy: Unleashing Expertise for Success

Life Sciences organizations engaged in drug discovery, development, and commercialization grapple with intricate challenges. The quest for novel therapeutics demands extensive research, vast datasets, and the integration of multifaceted processes. Managing and analyzing this wealth of data, ensuring compliance with stringent regulations, and streamlining collaboration across global teams are hurdles that demand innovative solutions.

Moreover, the timeline from initial discovery to commercialization is often lengthy, consuming precious time and resources. To overcome these challenges and stay competitive, Life Sciences organizations must harness cutting-edge technologies, optimize data workflows, and maintain compliance without compromise.

Amid these complexities, Amazon Web Services (AWS) emerges as a game-changing ally. AWS’s industry-leading cloud platform includes specialized services tailored to the unique needs of Life Sciences and empowers organizations to:

- Accelerate Research: AWS’s scalable infrastructure facilitates high-performance computing (HPC), enabling faster data analysis, molecular modeling, and genomics research. This acceleration is pivotal in expediting drug discovery.

- Enhance Data Management: With AWS, Life Sciences organizations can store, process, and analyze massive datasets securely. AWS’s data management solutions ensure data integrity, compliance, and accessibility.

- Optimize Collaboration: AWS provides the tools and environment for seamless collaboration among dispersed research teams. Researchers can collaborate in real time, enhancing efficiency and innovation.

- Ensure Security and Compliance: AWS offers robust security measures and compliance certifications specific to the Life Sciences industry, ensuring that sensitive data is protected and regulatory requirements are met.

While AWS holds immense potential, realizing its benefits requires expertise. This is where a trusted AWS partner becomes invaluable. An experienced partner not only understands the intricacies of AWS but also comprehends the unique challenges Life Sciences organizations face.

Partnering with a trusted AWS expert offers:

- Strategic Guidance: A seasoned partner can tailor AWS solutions to align with the Life Sciences sector’s specific goals and regulatory constraints, ensuring a seamless fit.

- Efficient Implementation: AWS experts can expedite the deployment of Cloud solutions, minimizing downtime and maximizing productivity.

- Ongoing Support: Beyond implementation, a trusted partner offers continuous support, ensuring that AWS solutions evolve with the organization’s needs.

- Compliance Assurance: With deep knowledge of industry regulations, a trusted partner can help navigate the compliance landscape, reducing risk and ensuring adherence.

Certified AWS engineers bring transformative expertise to cloud strategy and data architecture, propelling organizations toward unprecedented success.

AWS Certifications: What They Mean for Organizations

AWS offers a comprehensive suite of globally recognized certifications, each representing a distinct level of proficiency in managing AWS Cloud technologies. These certifications are not just badges; they signify a commitment to excellence and a deep understanding of Cloud infrastructure.

In fact, studies show that professionals who pursue AWS certification are faster, more productive troubleshooters than non-certified employees. For research and development IT teams, the AWS certifications held by their members translate into powerful advantages. These certifications unlock the ability to harness AWS’s cloud capabilities for driving innovation, efficiency, and cost-effectiveness in data-driven processes.

Meet RCH’s Certified AWS Experts: Your Key to Advanced Proficiency

At RCH, we prioritize professional and technical skill development across our team, and we proudly recognize our AWS-certified professionals:

- Mohammad Taaha, AWS Solutions Architect Professional

- Yogesh Phulke, AWS Solutions Architect Professional

- Michael Moore, AWS DevOps Engineer Professional

- Abdul Samad, AWS Solutions Architect Associate

- Baris Bilgin, AWS Solutions Architect Associate

- Isaac Adanyeguh, AWS Solutions Architect Associate

- Matthew Jaeger, AWS Cloud Practitioner & SysOps Administrator

- Lyndsay Frank, AWS Cloud Practitioner

- Dennis Runner, AWS Cloud Practitioner

- Burcu Dikeç, AWS Cloud Practitioner

When you partner with RCH and our AWS-certified experts, you gain access to technical knowledge and tap into a wealth of experience, innovation, and problem-solving capabilities. Advanced proficiency in AWS certifications means that our team can tackle even the most complex Cloud challenges with confidence and precision.

Our certified AWS experts don’t just deploy Cloud solutions; they architect them with your unique business needs in mind. They optimize for efficiency, scalability, and cost-effectiveness, ensuring your Cloud strategy aligns seamlessly with your organizational goals, including many of the following needs:

- Creating extensive solutions for AWS EC2 with multiple frameworks (EBS, ELB, SSL, Security Groups and IAM), as well as RDS, CloudFormation, Route 53, CloudWatch, CloudFront, CloudTrail, S3, Glue, and Direct Connect.

- Deploying high-performance computing (HPC) clusters on AWS using AWS ParallelCluster running the SGE scheduler.

- Automating operational tasks, including software configuration, server scaling and deployments, and database setups in multiple AWS Cloud environments using modern application and configuration management tools (e.g., CloudFormation and Ansible).

- Working closely with clients to design networks, systems, and storage environments that effectively reflect their business needs, security, and service level requirements.

- Architecting and migrating data from on-premises solutions (Isilon) to AWS (S3 & Glacier) using industry-standard tools (Storage Gateway, Snowball, CLI tools, DataSync, among others).

- Designing and deploying plans to remediate accounts affected by IP overlap.

All of these tasks have boosted the efficiency of data-oriented processes for clients and made them better able to capitalize on new technologies and workflows.

The Value of Working with AWS Certified Partners

In an era where data and technology are the cornerstones of success, working with a partner who embodies advanced proficiency in AWS is not just a strategic choice—it’s a game-changing move. At RCH Solutions, we leverage the power of AWS certifications to propel your organization toward unparalleled success in the cloud landscape.

Learn how RCH can support your Cloud strategy or CloudOps needs today.

Create cutting-edge data architecture for highly specialized Life Sciences

Data pipelines are simple to understand: they’re systems or channels that allow data to flow from one point to another in a structured manner. But structuring them for complex use cases in the field of genomics is anything but simple.

Genomics relies heavily on data pipelines to process and analyze large volumes of genomic data efficiently and accurately. Given the vast amount of detail involved in DNA and RNA sequencing, researchers require robust genomics pipelines that can process, analyze, store, and retrieve data on demand.

It’s essential to build genomics pipelines that serve the various functions of genomics research and optimize them to conduct accurate and efficient research faster than the competition. Here’s how RCH is helping your competitors implement and optimize their genomics data pipelines, along with some best practices to keep in mind throughout the process.

Early-stage steps for implementing a genomics data pipeline

Whether you’re creating a new data pipeline for your start-up or streamlining existing data processes, your entire organization will benefit from laying a few key pieces of groundwork first. These decisions will influence all other decisions you make regarding hosting, storage, hardware, software, and a myriad of other details.

Defining the problem and data requirements

All data-driven organizations, and especially those in the Life Sciences, need the ability to move data and turn it into actionable insights as quickly as possible. For organizations with legacy infrastructures, defining the problems is a little easier since you have more insight into your needs. For startups, a “problem” might not exist, but a need certainly does. You have goals for business growth and the transformation of society at large, starting with one analysis at a time. So, start by reviewing your projects and goals with the following questions:

- What do your workflows look like?

- How does data move from one source to another?

- How will you input information into your various systems?

- How will you use the data to reach conclusions or generate more data?

Grounding the planning phase in your projects, your goals, and the answers to the questions above will lead to an architecture laid out to deliver the most efficient results based on how you work. The answers to these questions (and others) will also reveal more about your data requirements, including storage capacity and processing power, so your team can make informed and sustainable decisions.

Data collection and storage

The Cloud has revolutionized the way Life Sciences companies collect and store data. AWS Cloud computing creates scalable solutions, allowing companies to add or remove capacity as business dictates. Many companies still use on-premises servers, while others use a hybrid mix.

Part of the decision-making process may involve compliance with HIPAA, GDPR, the Genetic Diagnostics Act, and other data privacy laws. Some regulations may prohibit the use of public Cloud computing. Decision-makers will need to weigh the pros and cons of each solution to ensure efficiency without sacrificing compliance.

Data cleaning and preprocessing

Raw sequencing data often contains noise, errors, and artifacts that need to be corrected before downstream analysis. Pre-processing involves tasks like trimming, quality filtering, and error correction to enhance data quality. This helps maintain the integrity of the pipeline while improving outputs.
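
As a rough illustration of the trimming and quality-filtering steps described above, the sketch below works on a single FASTQ record in plain Python. It assumes Phred+33 quality encoding, and the function names and thresholds (`min_q=20`, `min_mean_q=20.0`) are illustrative assumptions rather than settings from any particular tool. Production pipelines generally rely on dedicated trimming software instead of hand-written code.

```python
from statistics import mean


def phred_scores(quality: str) -> list[int]:
    """Decode a FASTQ quality string, assuming Phred+33 encoding."""
    return [ord(ch) - 33 for ch in quality]


def trim_low_quality_tail(seq: str, quality: str, min_q: int = 20) -> tuple[str, str]:
    """Drop bases from the 3' end while their quality is below min_q."""
    scores = phred_scores(quality)
    end = len(scores)
    while end > 0 and scores[end - 1] < min_q:
        end -= 1
    return seq[:end], quality[:end]


def passes_quality_filter(quality: str, min_mean_q: float = 20.0) -> bool:
    """Keep a read only if it is non-empty and its mean quality is high enough."""
    scores = phred_scores(quality)
    return bool(scores) and mean(scores) >= min_mean_q


# 'I' encodes Q40 and '#' encodes Q2 under Phred+33.
trimmed_seq, trimmed_qual = trim_low_quality_tail("ACGTAC", "IIII##")
```

Here the two low-quality bases at the end of the read are removed, and the remaining read would pass the mean-quality filter.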

Data movement

Generated data is typically written to local storage and then moved elsewhere, such as the Cloud or network-attached storage (NAS). This gives companies more capacity at a lower cost. It also frees up local storage on instruments, which is usually limited.

The timeframe when the data gets moved should also be considered. For example, does the data get moved at the end of a run or as the data is generated? Do only successful runs get moved? The data format can also change. For example, the file format required for downstream analyses may require transformation prior to ingestion and analysis. Typically, raw data is read-only and retained. Future analyses (any transformations or changes) would be performed on a copy of that data.
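
One possible way to encode these choices is sketched below: only runs that finished successfully are moved, the move happens at the end of a run, and the archived raw data is made read-only. The marker file name `RUN_COMPLETE` and the function name are hypothetical, and the archive is assumed to be a mounted path (for example a NAS share). Moving data to Cloud object storage would use the provider's own tooling instead.

```python
import shutil
import stat
from pathlib import Path

# Hypothetical marker file an instrument writes when a run finishes cleanly.
COMPLETE_MARKER = "RUN_COMPLETE"


def move_completed_runs(instrument_dir: Path, archive_dir: Path) -> list[str]:
    """Copy finished runs to archive storage and mark the copies read-only."""
    moved = []
    for run_dir in sorted(p for p in instrument_dir.iterdir() if p.is_dir()):
        if not (run_dir / COMPLETE_MARKER).exists():
            continue  # run still in progress or failed; leave it in place
        target = archive_dir / run_dir.name
        if target.exists():
            continue  # already archived on a previous pass
        shutil.copytree(run_dir, target)
        for f in target.rglob("*"):
            if f.is_file():
                f.chmod(stat.S_IRUSR | stat.S_IRGRP)  # raw data stays read-only
        shutil.rmtree(run_dir)  # free the instrument's limited local storage
        moved.append(run_dir.name)
    return moved
```

Later analyses would then work on a copy of the archived files rather than on the read-only originals.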

Data disposal

What happens to unsuccessful run data? Where does the data go? Will you get an alert? Not all data needs to be retained, but you’ll need to specify what happens to data that doesn’t successfully complete its run.

Organizations should also consider upkeep and administration. Someone should be in charge of responding to failed data runs as well as figuring out what may have gone wrong. Some options include adding a system response, isolating the “bad” data to avoid bottlenecks, logging the alerts, and identifying and fixing root causes.
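
A minimal sketch of the "isolate and log" option might look like the following. The function name, the quarantine directory, and the `FAILURE_REASON.txt` file are assumptions made for illustration. The point is simply that failed data leaves the main path, the reason is recorded, and an alert is logged for whoever owns the follow-up.

```python
import logging
import shutil
from pathlib import Path

logger = logging.getLogger("pipeline.disposal")


def quarantine_failed_run(run_dir: Path, quarantine_dir: Path, reason: str) -> Path:
    """Move a failed run out of the main path and record why it failed."""
    quarantine_dir.mkdir(parents=True, exist_ok=True)
    target = quarantine_dir / run_dir.name
    shutil.move(str(run_dir), str(target))
    # Keep the reason next to the data so root-cause analysis has context.
    (target / "FAILURE_REASON.txt").write_text(reason + "\n")
    logger.warning("Run %s quarantined: %s", run_dir.name, reason)
    return target
```

A retention rule (for example, deleting quarantined runs after review) could be layered on top once the organization decides which failed data is worth keeping.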

Data analysis and visualization

Visualizations can help speed up analysis and insights. Users can gain clear-cut answers from data charts and other visual elements and take decisive action faster than reading reports. Define what these visuals should look like and the data they should contain.

Location for the compute

Where the compute is located for cleaning, preprocessing, downstream analysis, and visualization is also important. The closer the data is to the computing source, the shorter distance it has to travel, which translates into faster data processing.
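
To put rough numbers on this, the sketch below estimates an idealized transfer time from data size and link speed. It ignores protocol overhead, congestion, and storage throughput, and the link speeds are illustrative assumptions, so real transfers will usually be slower.

```python
def transfer_seconds(size_bytes: float, bandwidth_bits_per_s: float, latency_s: float = 0.0) -> float:
    """Idealized transfer time: latency plus payload bits divided by link rate."""
    return latency_s + (size_bytes * 8) / bandwidth_bits_per_s


one_terabyte = 1e12  # decimal terabyte, in bytes
hours_at_1_gbps = transfer_seconds(one_terabyte, 1e9) / 3600    # about 2.2 hours
hours_at_10_gbps = transfer_seconds(one_terabyte, 10e9) / 3600  # about 0.22 hours
```

Under these assumptions, moving 1 TB takes roughly 8,000 seconds on a 1 Gbps link and roughly 800 seconds on a 10 Gbps link, which is why placing compute near the data can matter for large genomics datasets.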

Optimization techniques for genomics data pipelines

Establishing a scalable architecture is just the start. As technology improves and evolves, opportunities to optimize your genomic data pipeline become available. Some of the optimization techniques we apply include:

Parallel processing and distributed computing

Parallel processing involves breaking down a large task into smaller sub-tasks which can happen simultaneously on different processors or cores within a single computer system. The workload is divided into independent parts, allowing for faster computation times and increased productivity.

Distributed computing is similar, but involves breaking down a large task into smaller sub-tasks that are executed across multiple computer systems connected to one another via a network. This allows for more efficient use of resources by dividing the workload among several computers.
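
The single-machine case can be sketched with Python's standard `concurrent.futures` module. The example below splits a GC-content calculation into one independent sub-task per sequence and combines the partial results. The function names are illustrative, and GC content is chosen only because it divides cleanly into independent parts.

```python
from concurrent.futures import ProcessPoolExecutor


def gc_counts(sequence: str) -> tuple[int, int]:
    """Sub-task: return (G/C bases, total bases) for one sequence."""
    s = sequence.upper()
    return s.count("G") + s.count("C"), len(s)


def gc_fraction(sequences: list[str], make_executor=ProcessPoolExecutor, workers: int = 4) -> float:
    """Split the work per sequence, run the sub-tasks in parallel, then combine."""
    with make_executor(max_workers=workers) as pool:
        partials = list(pool.map(gc_counts, sequences))
    gc = sum(p[0] for p in partials)
    total = sum(p[1] for p in partials)
    return gc / total if total else 0.0
```

With the default `ProcessPoolExecutor`, the sub-tasks run on separate processor cores; on platforms that spawn worker processes, the call should sit under an `if __name__ == "__main__":` guard. Passing `ThreadPoolExecutor` as `make_executor` swaps in threads, which is convenient for quick tests. A distributed version would hand the same sub-tasks to workers on other machines through a cluster scheduler rather than a local pool.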

Cloud computing and serverless architectures

Cloud computing uses remote servers hosted on the internet to store, manage, and process data instead of relying on local servers or personal computers. A form of this is serverless architecture, which allows developers to build and run applications without having to manage infrastructure or resources.
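
As a hedged illustration of the serverless model, the sketch below follows the AWS Lambda convention of a Python `handler(event, context)` function reacting to an S3 upload notification. The `start_preprocessing` action label and the file-suffix rule are hypothetical, and the function makes no AWS calls; it only shows that the developer writes the event-handling logic while the platform manages the servers.

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Lambda-style entry point: pick out newly uploaded FASTQ files."""
    uploads = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.endswith((".fastq", ".fastq.gz")):
            uploads.append({"bucket": bucket, "key": key, "action": "start_preprocessing"})
    return {"queued": uploads}
```

In a real deployment the returned items would be handed to a queue or workflow service rather than simply returned.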

Containerization and orchestration tools

Containerization is the process of packaging an application, along with its dependencies and configuration files, into a lightweight “container” that can easily deploy across different environments. It abstracts away infrastructure details and provides consistency across different platforms.

Containerization also helps with reproducibility. Users can expect better performance if the compute is in close proximity to the data. Storage can also be optimized for longer-term data retention by moving data to a cheaper storage tier when feasible.

Orchestration tools manage and automate the deployment, scaling, and monitoring of containerized applications. These tools provide a centralized interface for managing clusters of containers running on multiple hosts or cloud providers. They offer features like load balancing, auto-scaling, service discovery, health checks, and rolling updates to ensure high availability and reliability.

Caching and data storage optimization

We explore a variety of data optimization techniques, including compression, deduplication, and tiered storage, to speed up retrieval and processing. Caching also enables faster retrieval of data that is frequently used. It’s readily available in the cache memory instead of being pulled from the original source. This reduces response times and minimizes resource usage.

Best practices for data pipeline management in genomics

As genomics research becomes increasingly complex and capable of processing more and different types of data, it is essential to manage and optimize the data pipeline efficiently to create accurate and reproducible results. Here are some best practices for data pipeline management in genomics.

- Maintain proper documentation and version control. A data pipeline without proper documentation can be difficult to understand, reproduce, and maintain over time. When multiple versions of a pipeline exist with varying parameters or steps, it can be challenging to identify which pipeline version was used for a particular analysis. Documentation in genomics data pipelines should include detailed descriptions of each step and parameter used in the pipeline. This helps users understand how the pipeline works and provides context for interpreting the results obtained from it.

- Test and validate pipelines routinely. The sheer complexity of genomics data requires careful and ongoing testing and validation to ensure the accuracy of the data. This data is inherently noisy and may contain errors which will affect downstream processes.

- Continuously integrate and deploy data. Data is only as good as its accessibility. Constantly integrating and deploying data ensures that more data is readily usable by research teams.

- Consider collaboration and communication among team members. The data pipeline architecture affects the way teams send, share, access, and contribute to data. Think about the user experience and seek ways to create intuitive controls that improve productivity.
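
For the documentation and version control practice in particular, one lightweight approach is to write a small manifest alongside every analysis. The sketch below is an assumption about how that could look, not a standard format: the field names, pipeline name, and version string are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_run_manifest(pipeline: str, version: str, parameters: dict, inputs: dict[str, bytes]) -> dict:
    """Describe one pipeline run well enough to identify and reproduce it later."""
    canonical_params = json.dumps(parameters, sort_keys=True)
    return {
        "pipeline": pipeline,
        "version": version,  # for example a git tag or commit hash
        "parameters": parameters,
        "parameters_sha256": hashlib.sha256(canonical_params.encode()).hexdigest(),
        "inputs_sha256": {n: hashlib.sha256(d).hexdigest() for n, d in sorted(inputs.items())},
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }


manifest = build_run_manifest(
    "variant-calling",
    "1.4.2",
    {"min_q": 20, "aligner": "example"},
    {"sample.fastq": b"@r1\nACGT\n+\nIIII\n"},
)
print(json.dumps(manifest, indent=2))
```

Because the parameters are serialized with sorted keys before hashing, two runs with the same settings produce the same `parameters_sha256`, which makes it easier to tell which pipeline version and parameters produced a given result.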

Start Building Your Genomics Data Pipeline with RCH Solutions

About 1 in 10 people (or 30 million) in the United States suffer from a rare disease, and in many cases, only special analyses can detect these diseases and give patients the definitive answers they seek. These factors underscore the importance of genomics and the need to further streamline processes that can lead to significant breakthroughs and accelerated discovery.

But implementing and optimizing data pipelines in genomics research shouldn’t be treated as an afterthought. Working with a reputable Bio-IT provider that specializes in the complexities of Life Sciences gives Biopharmas the best path forward and can help build and manage a sound and extensible scientific computing environment that supports your goals and objectives, now and into the future. RCH Solutions understands the unique requirements of data processing in the context of genomics and how to implement data pipelines today while optimizing them for future developments.

Let’s move humanity forward together — get in touch with our team today.

Discover the differences between Cloud and edge computing and pave the way toward improved efficiency.

Life sciences organizations process more data than the average company—and need to do so as quickly as possible. As the world becomes more digital, technology has given rise to two popular computing models: Cloud computing and edge computing. Both of these technologies have their unique strengths and weaknesses, and understanding the difference between them is crucial for optimizing your science IT infrastructure now and into the future.

The Basics

Cloud computing refers to a model of delivering on-demand computing resources over the internet. The Cloud allows users to access data, applications, and services from anywhere in the world without expensive hardware or software investments.

Edge computing, on the other hand, involves processing data at or near its source instead of sending it back to a centralized location, such as a Cloud server.

Now, let’s explore the differences between Cloud vs. edge computing as they apply to Life Sciences and how to use these learnings to formulate and better inform your computing strategy.

Performance and Speed

One of the major advantages of edge computing over Cloud computing is speed. With edge computing, data processing occurs locally on devices rather than being sent to remote servers for processing. This significantly reduces latency, as data doesn’t have to travel back and forth between devices and Cloud servers. Critical data can be analyzed sooner because processing happens at or near the source, without waiting for the data to be transmitted over long distances. This can be critical in applications like real-time monitoring, autonomous vehicles, or robotics.

Cloud computing, on the other hand, offers greater processing power and scalability, which benefits large-scale data analysis and processing. By providing on-demand access to shared resources, Cloud platforms offer virtually unlimited storage and processing capacity that can be scaled up or down based on demand, so businesses can run complex applications with high computing requirements without investing in expensive hardware or infrastructure. Cloud providers also offer a range of tools and services for managing data storage, security, and analytics at scale, something edge devices cannot match.

Security and Privacy

With edge computing, there could be a greater risk of data loss if damage were to occur to local servers. Data loss is naturally less of a threat with Cloud storage, but there is a greater possibility of cybersecurity threats in the Cloud. Cloud computing is also under heavier scrutiny when it comes to collecting personal identifying information, such as patient data from clinical trials.

A top priority for security in both edge and Cloud computing is to protect sensitive information from unauthorized access or disclosure. One way to do this is to implement strong encryption techniques that ensure data is only accessible by authorized users. Role-based permissions and multi-factor authentication create strict access control measures, plus they can help achieve compliance with relevant regulations, such as GDPR or HIPAA.
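
The access-control part of this can be sketched in a few lines. The roles, permission names, and the rule that patient-level permissions require multi-factor authentication are all hypothetical examples; a real deployment would rely on the identity and access management features of its platform rather than application code like this.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; real systems load this from policy.
ROLE_PERMISSIONS = {
    "analyst": {"read:deidentified"},
    "clinical_lead": {"read:deidentified", "read:patient"},
    "admin": {"read:deidentified", "read:patient", "export:patient"},
}
# Permissions that additionally require a verified second factor.
MFA_REQUIRED = {"read:patient", "export:patient"}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    mfa_verified: bool = False


def is_allowed(user: User, permission: str) -> bool:
    """Allow only if the role grants the permission and the MFA rule is met."""
    if permission not in ROLE_PERMISSIONS.get(user.role, set()):
        return False
    return user.mfa_verified or permission not in MFA_REQUIRED
```

For example, a `clinical_lead` who has not completed multi-factor authentication could read de-identified data but not patient-level data, and an unknown role is denied everything by default.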

Organizations should carefully consider their specific use cases and implement appropriate security and privacy controls, regardless of their elected computing strategy.

Scalability and Flexibility

Scalability and flexibility are both critical considerations in relation to an organization’s short- and long-term discovery goals and objectives.

The scalability of Cloud computing has been well documented. Data capacity can easily be scaled up or down on demand, depending on business needs. Organizations can quickly scale horizontally too, as adding new devices or resources as you grow takes very little configuration and leverages existing Cloud capacities.

While edge devices are becoming increasingly powerful, they still have limitations in terms of memory and processing power. Certain applications may struggle to run efficiently on edge devices, particularly those that require complex algorithms or high-speed data transfer.

Another challenge with scaling up edge computing is ensuring efficient communication between devices. As more and more devices are added to an edge network, it becomes increasingly difficult to manage traffic flow and ensure that each device receives the information it needs in a timely manner.

Cost-Effectiveness

Both edge and Cloud computing have unique cost management challenges, and opportunities, that require different approaches.

Edge computing can be cost-effective, particularly for environments where high-speed internet is unreliable or unavailable. Edge computing cost management requires careful planning and optimization of resources, including hardware, software, device and network maintenance, and network connectivity.

In general, it’s less expensive to set up a Cloud-based environment, especially for firms with multiple offices or locations. This way, all locations can share the same resources instead of setting up individual on-premises computing environments. However, Cloud computing requires careful and effective management of infrastructure costs, such as computing, storage, and network resources, to maintain speed and uptime.

Decision Time: Edge Computing or Cloud Computing for Life Sciences?

Both Cloud and edge computing offer powerful, speedy options for Life Sciences, along with the capacity to process high volumes of data without losing productivity. Edge computing may hold an advantage over the Cloud in terms of latency since data doesn’t have to travel far, but the processing power, scalability, and cost savings that come with the Cloud can help organizations do more with their resources.

As far as choosing a solution, it’s not always a matter of one being better than the other. Rather, it’s about leveraging the best qualities of each for an optimized environment, based on your firm’s unique short- and long-term goals and objectives. So, if you’re ready to review your current computing infrastructure or prepare for a transition, and need support from a specialized team of edge and Cloud computing experts, get in touch with our team today.

About RCH Solutions

RCH Solutions supports Global, Startup, and Emerging Biotech and Pharma organizations with edge and Cloud computing solutions that uniquely align to discovery goals and business objectives.

High-Performance Computing (HPC) has long been an incredible accelerant in the race to discover and develop novel drugs and therapies for both new and well-known diseases. And an HPC migration to the Cloud might be your next step to maintain or grow your organization’s competitive advantage.

Whether it’s a full HPC migration to the Cloud or a uniquely architected hybrid approach, evolving your HPC ecosystem to the Cloud brings critical advantages and benefits including:

- Flexibility and scalability

- Optimized costs

- Enhanced security

- Compliance

- Backup, recovery, and failover

- Simplified management and monitoring

With careful planning, strategic design, effective implementation, and the right support, migrating your HPC systems to the Cloud can lead to accelerated breakthroughs and drug discovery.

But with this level of promise and performance come challenges and caveats that require strategic consideration throughout all phases of your supercomputing and HPC development, migration, and management.

So, before you commence your HPC migration from on-premises data centers or traditional HPC clusters to the Cloud, here are some key considerations to keep in mind throughout your planning phase.

1. Assess & Understand Your Legacy HPC Environment

Building a comprehensive migration plan and strategy from inception is necessary for optimization and sustainable outcomes. A proper assessment includes an evaluation of the current state of your legacy hardware, software, and the data resources available for use, as well as the system’s capabilities, reliability, scalability, and flexibility, prioritizing security and maintenance of the system.

Gaining a deep and thorough understanding of your current infrastructure and computing environment will help identify existing technical constraints or bottlenecks and inform the order in which workloads should be migrated. That level of insight can help your organization sidestep major, arguably avoidable, hurdles.

2. Determine the Right Cloud Provider and Tooling

Determining the right HPC Cloud provider for your organization can be a complex process, but it is an undeniably critical one. In fact, your entire computing environment depends on it. It involves researching the available options, comparing features and services, and evaluating cost, reputation, and performance.

Amazon Web Services, Microsoft Azure, and Google Cloud, to name just the three biggest, offer storage and Cloud computing services that drive accelerated innovation by providing fast networking and virtually unlimited infrastructure to store and manage massive data sets, along with the computing power required to analyze them. Ultimately, many vendors offer different types of cloud infrastructure that run large, complex simulations and deep learning workloads in the cloud, so it is important to first decide which model (public cloud, private cloud, or hybrid cloud) best meets the needs of your unique HPC workloads.

3. Plan for the Right Design & Deployment

To effectively plan for an HPC migration to the Cloud, it is important to clearly define the objectives, determine the requirements and constraints, identify the expected outcomes, and set a timeline for the project.

From a more technical perspective, it is important to consider the application’s specific requirements and the inherent capabilities including storage requirements, memory capacity, and other components that may be needed to run the application. If a workload requires a particular operating system, for example, then it should be chosen accordingly.

Finally, it is important to understand the networking and security requirements of the application before working through the design phase, and certainly before the deployment phase, of your HPC migration.

The HPC Migration Journey Begins Here…

By properly considering all of these factors, it is possible to effectively plan for your organization’s HPC migration and its ability to leverage the power of supercomputing in drug discovery.

Assuming your plan is comprehensive, effective and sustainable, implementing your HPC migration plan is ultimately still a massive undertaking, particularly for research IT teams likely already overstretched or for an existing Bio-IT vendor lacking specialized knowledge and skills.

So, if your team is ready to take the leap and begin your HPC migration, get in touch with our team today.

The Next Phase of Your HPC Migration in the Cloud

An HPC migration to the Cloud can be an incredibly complex process, but with strategic planning and design, effective implementation, and the right support, your team will be well on its way to sustainable success. Click below and get in touch with our team to learn more about our comprehensive HPC Migration services that support all phases of your HPC migration journey, regardless of which stage you are in.

Learn the key considerations for evaluating and selecting the right application for your Cloud environment.

Good software means faster work for drug research and development, particularly concerning proteins. Proteins serve as the basis for many treatments, and learning more about their structures can accelerate the development of new treatments and medications.

With more software now infusing an artificial intelligence element, researchers expect to significantly streamline their work and revolutionize the drug industry. When it comes to protein folding software, two names have become industry frontrunners: AlphaFold and Openfold.

Learn the differences between the two programs, including insights into how RCH is supporting and informing our customers about the strategic benefits the AlphaFold and Openfold applications can offer based on their environment, priorities and objectives.

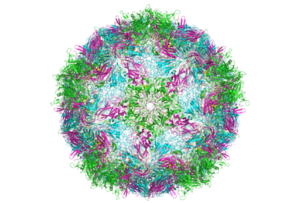

About AlphaFold2

Developed by DeepMind and EMBL’s European Bioinformatics Institute, AlphaFold2 uses AI technology to predict a protein’s 3D structure based on its amino acid sequence. Its structure database is hosted on Google Cloud Storage and is free to access and use.

The newer model, AlphaFold2, won the CASP competition in November 2020, having achieved more accurate results than any other entry. AlphaFold2 scored above 90 for more than two-thirds of the proteins in CASP’s global distance test, which measures how closely the computationally predicted structure matches the lab-determined structure.

To date, there are more than 200 million known proteins, each one with a unique 3D shape. AlphaFold2 aims to simplify the once time-consuming and expensive process of modeling these proteins. Its speed and accuracy are accelerating research and development in nearly every area of biology. As a result, scientists will be better able to tackle diseases, discover new medicines and cures, and understand more about life itself.

Exploring Openfold Protein Folding Software

Another player in the protein software space, Openfold, is a PyTorch reproduction of DeepMind’s AlphaFold. Founded by three Seattle biotech companies (Cyrus Biotechnology, Outpace Bio, and Arzeda), the team aims to support open-source development in the protein folding software space, and the project is registered on AWS. It is part of the nonprofit Open Molecular Software Foundation and has received support from the AWS Open Data Sponsorship Program.

Despite being more of a newcomer to the scene, Openfold is quickly turning heads with its open source model and more “completeness” compared to AlphaFold. In fact, it has been billed as a faster and more powerful version than its predecessor.

Like AlphaFold, Openfold is designed to streamline the process of discovering how proteins fold in and around on themselves, but possibly at a higher rate and more comprehensively than its predecessor. The model has undergone more than 100,000 hours of training on NVIDIA A100 Tensor Core GPUs, reaching more than 90% of its final accuracy within the first 3,000 hours.

AlphaFold vs. Openfold: Our Perspective

Despite Openfold being a reproduction of AlphaFold, there are several key differences between the two.

AlphaFold2 and Openfold boast similar accuracy ratings, but Openfold may have a slight advantage. Openfold’s inference is also about twice as fast as that of AlphaFold when modeling short proteins. For long protein strands, the speed advantage is minimal.

Openfold’s optimized memory usage allows it to handle much longer protein sequences—up to 4,600 residues on a single 40GB A100.

One of the clearest differences between AlphaFold2 and Openfold is that Openfold is trainable. This makes it valuable for our customers in niche or specialized research, a capability that AlphaFold lacks.

Key Use Cases from Our Customers

Both AlphaFold and Openfold have offered game-changing functionality for our customers’ drug research and development. That’s why many of the organizations we’ve supported have even considered a hybrid approach rather than making an either/or decision.

Both protein folding programs can be deployed across a variety of use cases, including:

New Drug Discovery

The speed and accuracy with which protein folding software can model protein strands make it a powerful tool in new drug development, particularly for diseases that have largely been neglected. These illnesses often disproportionately affect individuals in developing countries. Examples include parasitic diseases, such as Chagas disease or leishmaniasis.

Combating Antibiotic Resistance

As the usage of antibiotics continues to rise, so does the risk of individuals developing antibiotic resistance. Previous data from the CDC shows that nearly one in three prescriptions for antibiotics is unnecessary. It’s estimated that antibiotic resistance costs the U.S. economy nearly $55 billion every year in healthcare and productivity losses.

What’s more, when people become resistant to antibiotics, it leaves the door wide open for the creation of “superbugs.” Since these bugs cannot be killed with typical antibiotics, illnesses can become more severe.

Professionals from the University of Colorado, Boulder, are putting AlphaFold to the test in learning more about proteins involved in antibiotic resistance. The protein folding software is helping researchers identify protein structures that they could confirm via crystallography.

Vaccine Development

Learning more about protein structures is proving useful in developing new vaccines, such as a multi-agency collaboration on a new malaria vaccine. The WHO endorsed the first malaria vaccine in 2021. However, researchers at the University of Oxford and the National Institute of Allergy and Infectious Diseases are working together to create a more effective version that better prevents transmission.

Using AlphaFold and crystallography, the two agencies identified the first complete structure of the protein Pfs48/45. This breakthrough could pave the way for future vaccine developments.

Learning More About Genetic Variations

Genetics has long fascinated scientists and may hold the key to learning more about general health, predisposition to diseases, and other traits. A professor at ETH Zurich is using AlphaFold to learn more about how a person’s health may change over time or what traits they will exhibit based on specific mutations in their DNA.

AlphaFold has proven useful in reviewing proteins in different species over time, though the accuracy diminishes the further back in time the proteins are reviewed. Seeing how proteins evolve over time can help researchers predict how a person’s traits might change in the future.

How RCH Solutions Can Help

Selecting protein folding software for your research facility is easier with a trusted partner like RCH Solutions. Not only can we inform the selection process, but we also provide support in implementing new solutions. We’ll work with you to uncover your greatest needs and priorities and align the selection process with your end goals and your budget.

Contact us to learn how RCH Solutions can help.

Sources:

https://www.nature.com/articles/d41586-022-00997-5

https://www.deepmind.com/research/highlighted-research/alphafold

https://www.drugdiscoverytrends.com/7-ways-deepmind-alphafold-used-life-sciences/

https://www.cdc.gov/media/releases/2016/p0503-unnecessary-prescriptions.html

Cryo-EM brings a wealth of potential to drug research. But first, you’ll need to build an infrastructure to support large-scale data movement.

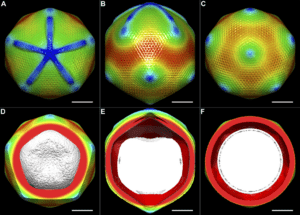

The 2017 Nobel prize in chemistry marked a new era for scientific research. Three scientists earned the honor with their introduction of cryo-electron microscopy—an instrument that delivers high-resolution imagery of molecule structures. With a better view of nucleic acids, proteins, and other biomolecules, new doors have opened for scientists to discover and develop new medications.

However, implementing cryo-electron microscopy isn’t without its challenges. Most notably, the instrument captures very large datasets that require unique considerations in terms of where they’re stored and how they’re used. The level of complexity and the distinct challenges cryo-EM presents require the support of a highly experienced Bio-IT partner like RCH, which is actively supporting large and emerging organizations with their cryo-EM implementation and management. But let’s jump into the basics first.

How Our Customers Are Using Cryo-Electron Microscopy

Cryo-electron microscopy (cryo-EM) is revolutionizing biology and chemistry. Our customers are using it to analyze the structures of proteins and other biomolecules with a greater degree of accuracy and speed compared to other methods.

In the past, scientists have used X-ray diffraction to get high-resolution images of molecules. But in order to receive these images, the molecules first need to be crystallized. This poses two problems. Many proteins won’t crystallize at all, and in those that do, the crystallization can often change the structure of the molecule, which means the imagery won’t be accurate.

Cryo-EM provides a better alternative because it doesn’t require crystallization. What’s more, scientists can gain a clearer view of how molecules move and interact with each other—something that’s extremely hard to do using crystallization.

Cryo-EM can also study larger proteins, complexes of molecules, and membrane-bound receptors. Using nuclear magnetic resonance (NMR) to achieve the same results is challenging, as NMR is typically limited to smaller proteins.

Because cryo-EM can give such detailed, accurate images of biomolecules, its use is being explored in the field of drug discovery and development. However, given its $5 million price tag and complex data outputs, it’s essential for labs considering cryo-EM to first have the proper infrastructure in place, including expert support, to avoid it becoming a sunk cost.

The Challenges We’re Seeing With the Implementation of Cryo-EM

Introducing cryo-EM to your laboratory can bring excitement to your team and a wealth of potential to your organization. However, it’s not a decision to make lightly, nor is it one you should make without consultation with strategic vendors actively working in the cryo-EM space, like RCH.

The biggest challenge labs face is the sheer amount of data they need to be prepared to manage. The instruments capture very large datasets that require ample storage, access controls, bandwidth, and the ability to organize and use the data.

The instruments themselves bear a high price tag, and adding the appropriate infrastructure increases that cost. The tools also require ongoing maintenance.

There’s also the consideration of upskilling your team to operate and troubleshoot the cryo-EM equipment. Given the newness of the technology, most in-house teams simply don’t have all of the required skills to manage the multiple variables, nor are they likely to have much (or any) experience working on cryo-EM projects.

Biologists are no strangers to undergoing training, so consider this learning curve just a part of professional development. However, combined with learning how to operate the equipment AND make sense of the data you collect, it’s clear that the learning curve is quite steep. It may take more training and testing than the average implementation project to feel confident in using the equipment.

For these reasons and more, engaging a partner like RCH that can support your firm from the inception of its cryo-EM implementation ensures critical missteps are circumvented, which ultimately creates more sustainable and future-proof workflows, discovery, and outcomes. With the challenges properly addressed from the start, the promises that cryo-EM holds are worth the extra time and effort it takes to implement it.

How to Create a Foundational Infrastructure for Cryo-EM Technology

As you consider your options for introducing cryo-EM technology, one of your priorities should be to create an ecosystem in which cryo-EM can thrive in a cloud-first, compute-forward approach. Setting the stage for success, and ensuring you are bringing the compute to the data from inception, can help you reap the most rewards and use your investment wisely.

Here are some of the top considerations for your infrastructure:

- Network Bandwidth

One early study of cryo-EM found that each microscope outputs about 500 GB of data per day. Higher bandwidth can help streamline data processing by increasing download speeds so that data can be more quickly reviewed and used.

- Proximity to and Capacity of Your Data Center

Cryo-EM databases are becoming more numerous and growing in size and scope. The largest data set in the Electron Microscopy Public Image Archive (EMPIAR) is 12.4TB, while the median data set is about 2TB. Researchers expect these massive data sets to become the norm for cryo-EM, which means you need to ensure your data center is prepared to handle a growing load of data. This applies to both cloud-first organizations and those with hybrid data storage models.

- Integration with High-Performance Computing

Integrating high-performance computing (HPC) with your cryo-EM environment ensures you can take advantage of the scope and depth of the data created. Scientists will be churning through massive piles of data and turning them into 3D models, which will take exceptional computing power.

- Having the Right Tools in Place

To use cryo-EM effectively, you’ll need to complement your instruments with other tools and software. For example, CryoSPARC is the most common software that’s purpose-built for cryo-EM technology. It has configured and optimized the workflows specifically for research and drug discovery.

- Availability and Level of Expertise

Because cryo-EM is still relatively new, organizations must decide how to gain the expertise they need to use it to its full potential. This could take several different forms, including hiring consultants, investing in internal knowledge development, and tapping into online resources.
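To put the bandwidth consideration in rough numbers, the short Python sketch below estimates how long it would take to move one microscope’s daily output, or the largest EMPIAR data set, over links of different speeds. The function name `transfer_hours`, the link speeds, and the 70% efficiency factor are assumptions chosen for illustration, not measurements from any particular environment.

```python
def transfer_hours(data_gb, link_gbps, efficiency=0.7):
    """Hours needed to move data_gb gigabytes over a link_gbps (gigabit/s) link.

    efficiency is an assumed fraction of nominal bandwidth actually achieved;
    real-world throughput depends on protocol, storage, and network conditions.
    """
    if data_gb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("expected data_gb >= 0, link_gbps > 0, 0 < efficiency <= 1")
    gigabits = data_gb * 8  # 1 byte = 8 bits
    seconds = gigabits / (link_gbps * efficiency)
    return seconds / 3600


DAILY_OUTPUT_GB = 500       # per-microscope figure cited above
LARGEST_EMPIAR_GB = 12_400  # 12.4TB data set cited above

for link in (1, 10):  # hypothetical 1 Gbps and 10 Gbps links
    daily = transfer_hours(DAILY_OUTPUT_GB, link)
    largest = transfer_hours(LARGEST_EMPIAR_GB, link)
    print(f"{link:>2} Gbps: daily output {daily:.1f} h, largest data set {largest:.1f} h")
```

Running the numbers this way for your own microscope count and link speeds is a quick check on whether data can leave the instrument as fast as it is produced.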

How RCH Solutions Can Help You Prepare for Cryo-EM

Implementing cryo-EM is an extensive and costly process, but laboratories can mitigate these and other challenges with the right guidance. It starts with knowing your options and taking all costs and possibilities into account.

Cryo-EM is the new frontier in drug discovery, and RCH Solutions is here to help you remain on the cutting edge of it. We provide tactical and strategic support in developing a cryo-EM infrastructure that will help you generate a return on investment.

Contact us today to learn more.

Sources:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7096719/

https://www.nature.com/articles/d41586-020-00341-9

https://www.gatan.com/techniques/cryo-em

https://www.chemistryworld.com/news/explainer-what-is-cryo-electron-microscopy/3008091.article

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6067001/

In Life Sciences, and medical fields in particular, there is a premium on expertise and the role of a specialist. When it comes to scientists, researchers, and doctors, even a single high-performer who brings advanced knowledge in their field often contributes more value than a few average generalists who may only have peripheral knowledge. Despite this premium placed on specialization or top-talent as an industry norm, many life science organizations don’t always follow the same measure when sourcing vendors or partners, particularly those in the IT space.

And that’s a misstep. Here’s why.

Why “A” Talent Matters

I’ve seen far too many organizations that had, or still have, the above strategy, and also many that focus on acquiring and retaining top talent. The difference? The former experienced slow adoption that stalled outcomes, often with major impacts on their short- and long-term objectives. The latter propelled their outcomes out of the gates, circumventing crippling mistakes along the way. For this reason and more, I’m a big believer in attracting and retaining only “A” talent. The best talent and the top performers (Quality) will always outshine and outdeliver a bunch of average ones. Most often, those individuals are inherently motivated and engaged, and when put in an environment where their skills are both nurtured and challenged, they thrive.

Why Expertise Prevails

While low-cost IT service providers with deep rosters may be able to throw a greater number of people at problems than their smaller, boutique counterparts, often the outcome is simply more people and more problems. Instead, life science teams should aim to follow their R&D talent acquisition processes and focus on value and what it will take to achieve the best outcomes in this space. Most often, it’s not about the quantity of support, advice, or execution resources, but about quality.

Why Our Customers Choose RCH

Our customers are like-minded and also employ top talent, which is why they value RCH: we consistently service them with the best. While some organizations feel that throwing bodies (Quantity) at a problem is one answer, often one for optics, RCH does not. We never have. Sometimes you can get by with a generalist; however, in our industry, we have found that our customers require and deserve specialists. The outcomes are more successful. The results are what they seek: seamless transformation.

In most cases, we are engaged with a customer who has employed the services of a very large professional services or system integration firm. Increasingly, those customers are turning to RCH to deliver on projects typically reserved for those large, expensive, process-laden companies. The reason is simple. There is much to be said for a focused, agile and proven company.

Why Many Firms Don’t Restrategize

So why do organizations continue to complain but rely on companies such as these? The answer has become clear—risk aversion. But the outcomes of that reliance are typically just increased costs, missed deadlines or major strategic adjustments later on – or all of the above. But why not choose an alternative strategy from inception? I’m not suggesting turning over all business to a smaller organization. But, how about a few? How about those that require proven focus, expertise and the track record of delivery? I wrote a piece last year on the risk of mistaking “static for safe,” and stifling innovation in the process. The message still holds true.

We all know that scientific research is well on its way to becoming, if not already, a multi-disciplinary, highly technical process that requires diverse and cross functional teams to work together in new ways. Engaging a quality Scientific Computing partner that matches that expertise with only “A” talent, with the specialized skills, service model and experience to meet research needs can be a difference-maker in the success of a firm’s research initiatives.

My take? Quality trumps quantity—always in all ways. Choose a scientific computing partner whose services reflect the specialized IT needs of your scientific initiatives and can deliver robust, consistent results. Get in touch with me below to learn more.

Data science has earned a prominent place on the front lines of precision medicine – the ability to target treatments to the specific physiological makeup of an individual’s disease. As cloud computing services and open-source big data have accelerated the digital transformation, small, agile research labs all over the world can engage in development of new drug therapies and other innovations.

Previously, the necessary open-source databases and high-throughput sequencing technologies were accessible only by large research centers with the necessary processing power. In the evolving big data landscape, startup and emerging biopharma organizations have a unique opportunity to make valuable discoveries in this space.

The drive for real-world data

Through big data, researchers can connect with previously untold volumes of biological data. They can harness the processing power to manage and analyze this information to detect disease markers and otherwise understand how we can develop treatments targeted to the individual patient. Genomic data alone will likely exceed 40 exabytes by 2025, according to 2015 projections published in the Public Library of Science journal PLOS Biology. As data volume increases and the cost of big data technologies decreases, this data becomes more accessible to emerging researchers.

A recent report from Accenture highlights the importance of big data in downstream medicine, specifically oncology. Among surveyed oncologists, 65% said they want to work with pharmaceutical reps who can fluently discuss real-world data, while 51% said they expect they will need to do so in the future.

The application of artificial intelligence in precision medicine relies on massive databases the software can process and analyze to predict future occurrences. With AI, your teams can quickly assess the validity of data and connect with decision support software that can guide the next research phase. You can find links and trends in voluminous data sets that wouldn’t necessarily be evident in smaller studies.

Applications of precision medicine

Among the oncologists Accenture surveyed, the most common applications for precision medicine included matching drug therapies to patients’ gene alterations, gene sequencing, liquid biopsy, and clinical decision support. In one example of the power of big data for personalized care, the Cleveland Clinic Brain Study is reviewing two decades of brain data from 200,000 healthy individuals to look for biomarkers that could potentially aid in prevention and treatment.

AI is also used to create new designs for clinical trials. These programs can identify possible study participants who have a specific gene mutation or meet other granular criteria much faster than a team of researchers could determine this information and gather a group of the necessary size.

A study published in the journal Cancer Treatment and Research Communications illustrates the impact of big data on cancer treatment modalities. The research team used AI to mine National Cancer Institute medical records and find commonalities that may influence treatment outcomes. They determined that taking certain antidepressant medications correlated with longer survival rates among the patients included in the dataset, opening the door for targeted research on those drugs as potential lung cancer therapies.

Other common precision medicine applications of big data include:

- New population-level interventions based on socioeconomic, geographic, and demographic factors that influence health status and disease risk

- Delivery of enhanced care value by providing targeted diagnoses and treatments to the appropriate patients

- Flagging adverse reactions to treatments

- Detection of the underlying cause of illness through data mining

- Human genomics decoding with technologies such as genome-wide association studies and next-generation sequencing software programs

These examples only scratch the surface of the endless research and development possibilities big data unlocks for start-ups in the biopharma sector. Consult with the team at RCH Solutions to explore custom AI applications and other innovations for your lab, including scalable cloud services for growing biotech and pharma research organizations.

Do You Need Support with Your Cloud Strategy?

Cloud services are swiftly becoming standard for those looking to create an IT strategy that is both scalable and elastic. But when it comes time to implement that strategy—particularly for those working in life sciences R&D—there are a number of unique combinations of services to consider.

Here is a checklist of key areas to examine when deciding if you need expert support with your Cloud strategy.

- Understand the Scope of Your Project

Just as critical as knowing what should be in the cloud is knowing what should not be. The act of mapping out the on-premise vs. cloud-based solutions in your strategy will help demonstrate exactly what your needs are and where some help may be beneficial.

- Map Out Your Integration Points

Speaking of on-premise vs. in the Cloud, do you have an integration strategy for getting cloud solutions talking to each other as well as to on-premise solutions?

- Does Your Staff Match Your Needs?

When needs change on the fly, often your staff needs to adjust. However, those adjustments are not always so easily implemented, which can lead to gaps. So when creating your cloud strategy, ensure you have the right team to help understand the capacity, uptime and security requirements unique to a cloud deployment.

Check out our free eBook, Cloud Infrastructure Takes Research Computing to New Heights, to help uncover the best cloud approach for your team.

- Do Your Solutions Meet Your Security Standards?

There are more than enough examples to show the importance of data security. However, it’s no longer enough to understand just your own data security needs. You must now know the risk management and data security policies of your providers as well.

- Don’t Forget About Data

Life Sciences is awash with data, and that is a good thing. But all this data has consequences, including for your cloud strategy, so ensure your approach can handle all your bandwidth needs.

- Agree on a Timeline

Finally, it is important to know the timeline of your needs and determine whether or not your team can achieve your goals. After all, the right solution is only effective if you have it at the right time. That means it is imperative you have the capacity and resources to meet your time-based goals.

Using RCH Solutions to Implement the Right Solution with Confidence

Leveraging the Cloud to meet the complex needs of scientific research workflows requires a uniquely high level of ingenuity and experience that is not always readily available to every business. Thankfully, our Cloud Managed Service solution can help. Steeped in more than 30 years of experience, it is based on a process to uncover, explore, and help define the strategies and tactics that align with your unique needs and goals.

We support all the Cloud platforms you would expect, such as AWS and others, and enjoy partner-level status with many major Cloud providers. Speak with us today to see how we can help deliver objective advice and support on the solution most suitable for your needs.

Studied benefits of Cloud computing in the biotech and pharma fields.

Cloud computing has become one of the most common investments in the pharmaceutical and biotech sectors. If your research and development teams don’t have the processing power to keep up with the deluge of available data for drug discovery and other applications, you’ve likely looked into the feasibility of a digital transformation.

Real-world research offers these examples of the effects Cloud-based computing environments can have for start-up and growing biopharma companies.

Competitive Advantage

As more competitors move to the Cloud, adopting this agile approach saves your organization from lagging behind. Consider these statistics:

- According to a February 2022 report in Pharmaceutical Technology, mentions of keywords related to Cloud computing in pharma company filings increased by 50% between the second and third quarters of 2021. What’s more, such mentions increased by nearly 150% over the five-year period from 2016 to 2021.

- An October 2021 McKinsey & Company report indicated that 16 of the top 20 pharmaceutical companies have referenced the Cloud in recent press releases.

- As far back as 2020, a PwC survey found that 60% of execs in pharma had either already invested in Cloud tech or had plans for this transition underway.

Accelerated Drug Discovery

In one example cited by McKinsey, Moderna’s first potential COVID-19 vaccine entered clinical trials just 42 days after virus sequencing. CEO Stéphane Bancel credited Cloud technology for this unprecedented turnaround time: it enables scalable and flexible access to droves of existing data and, as Bancel put it, doesn’t require you “to reinvent anything.”

Enhanced User Experience

Both employees and customers prefer to work with brands that show a certain level of digital fluency. In the survey by PwC cited above, 42% of health services and pharma leaders reported that better UX was the key priority for Cloud investment. Most participants (91%) predicted that this level of patient engagement will improve individuals’ ability to manage chronic diseases that require medication.

Rapid Scaling Capabilities

Cloud computing platforms can be almost instantly scaled to fit the needs of expanding companies in pharma and biotech. Teams can rapidly increase the capacity of these systems to support new products and initiatives without the investment required to scale traditional IT frameworks. For example, the McKinsey study estimates that companies can reduce the expense associated with establishing a new geographic location by up to 50% by using a Cloud platform.

Are you ready to transform organizational efficiency by shifting your biopharmaceutical lab to a Cloud-based environment? Connect with RCH today to learn how we support our customers in the Cloud with tools that facilitate smart, effective design and implementation of an extendible, scalable Cloud platform customized for your organizational objectives.

References

https://www.mckinsey.com/industries/life-sciences/our-insights/the-case-for-Cloud-in-life-sciences

https://www.pharmaceutical-technology.com/dashboards/filings/Cloud-computing-gains-momentum-in-pharma-filings-with-a-50-increase-in-q3-2021/

https://www.pwc.com/us/en/services/consulting/fit-for-growth/Cloud-transformation/pharmaceutical-life-sciences.html

Prepare for the next generation of R&D innovation.

As biotech and pharmaceutical start-ups experience accelerated growth, they often collide with computing challenges as the existing infrastructure struggles to support the increasingly complex compute needs of a thriving research and development organization.

By anticipating the need to scale the computing environment in the early stages of action for your pharma or biotech enterprise, you can shield your start-up from the impact of these five common concerns associated with rapid expansion.

Insufficient storage space

Life sciences companies conducting R&D, in particular, have to reckon with an incredible amount of data. Research published by the Journal of the American Medical Association indicates that each organization in this sector could easily generate ten terabytes of data daily, or about a million phone books’ worth of data. Start-ups without a plan in place to handle that volume of information will quickly overwhelm their computing environments. Forbes notes that companies must address both the cost of storing several copies of necessary data and the need for a comprehensive data management strategy to streamline and enhance access to historical information.
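As a back-of-the-envelope illustration of how quickly that volume adds up, the Python sketch below multiplies the ten-terabytes-per-day figure by a retention period and a number of stored copies. The function name `projected_storage_tb`, the one-year horizon, and the choice of three copies are assumptions made for the example; substitute your own retention and replication policy.

```python
def projected_storage_tb(daily_tb, days, copies=3):
    """Terabytes accumulated after `days` when `copies` of all data are kept.

    Assumes a constant daily volume and no deletion or compression.
    """
    if daily_tb < 0 or days < 0 or copies < 1:
        raise ValueError("expected daily_tb >= 0, days >= 0, copies >= 1")
    return daily_tb * days * copies


DAILY_TB = 10  # daily volume cited above

one_year_tb = projected_storage_tb(DAILY_TB, days=365, copies=3)
print(f"One year, three copies: {one_year_tb:,} TB ({one_year_tb / 1000:.2f} PB)")
```

Even under these simple assumptions, a single year of output lands in the petabyte range, which is why storage cost and data management strategy need to be planned together.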

Collaboration and access issues

As demonstrated by the COVID-19 pandemic and its aftermath, remote work has become essential across industries, including biotech and pharma. In addition, global collaborations are more common than ever before, emphasizing the need for streamlined access and connectivity from anywhere. Next-generation cloud-based environments allow you to optimize access and automate processes to facilitate collaboration, including but not limited to supply chain, production, and sales workflows.

Ineffective data security

Security threats compromise the invaluable intellectual property of your biotech or pharmaceutical start-up. As the team scales the company’s ability to process and analyze data, it proportionally increases the likelihood of a data breach. The world’s top 20 pharma companies by market sector experienced more than 9,000 breaches from January 2018 to September 2021, according to a Constella study reported by FiercePharma. Nearly two-thirds of these incidents occurred in the final nine months of the research period.

If your organization accesses and uses patient information, you are also creating exposure to costly HIPAA violations. Consider investing in a next-generation tech platform that provides proactive data security, with advanced measures like intelligent system integrations and new methods to validate and verify access requests.

Limited data processing power

As biotech and pharmaceutical companies increasingly invest in artificial intelligence, organizations without the infrastructure to implement next-generation analysis and processing tools will be at a significant disadvantage. AI and other types of machine learning dramatically reduce the time it takes to sift through seemingly endless data to find potential drug matches for disease states, understand mechanisms of action, and even predict possible side effects for drugs still in development.

Last year, The Guardian reported that 90% of large global pharmaceutical companies invested in AI in 2020, and most of their smaller counterparts have quickly followed suit. The Forbes article cited above projected AI spending of $2.45 billion in the biotech and pharmaceutical industries by 2025, an increase of nearly 430% over 2019 numbers.

Modernization and scale

Cloud-first environments can scale in tandem with your organization’s accelerated growth more easily than an on-prem server system. Whether you need to support expanding geographic locations or expanding performance needs, the cloud compute space can flex to accommodate an adolescent biotech company’s coming of age.

When your organization commits to the cloud platform, place best practices at the forefront of implementation. A framework based on data fidelity will prevent future access, collaboration and security issues. The cloud relies on infrastructure as code, a system that maintains stability through every phase of iterative growth.

Concerns about compliance

Nearly ten years ago, in its 2014 report “Rapid growth in biopharma: Challenges and opportunities,” McKinsey & Company identified the need for better quality-assurance measures in response to ever-increasing regulatory scrutiny. Since that time, the demands of domestic agencies such as the Food and Drug Administration have been compounded by the need to comply with numerous global regulations and quality benchmarks. Efficient, robust data processes can help adolescent biopharma companies keep up with these voluminous and constantly evolving requirements.

With a keen understanding of these looming challenges, research teams can leverage smart IT partnerships and emerging technologies in response. The 2014 McKinsey report correctly predicted that to successfully address the tech challenges of growth, organizations must expand capacity to adopt new technologies and take risks in terms of capital expenditures to scale the computing environment. Taking advantage of existing cloud platforms with innovative tools designed specifically for R&D can save your team the time and money of building a brand-new infrastructure for your tech needs.

Part Five in a Five-Part Series for Life Sciences Researchers and IT Professionals

Scientific research is increasingly a multi-disciplinary process that requires research scientists, data scientists and technical engineers to work together in new ways. Engaging an IT partner that has the specialized skills, service model and experience to meet your compute environment needs can therefore be a difference-maker in the success of your research initiatives.

If you’re unsure what specifically to look for as you evaluate your current partners, you’ve come to the right place! In this five-part blog series, we’ve provided a range of considerations important to securing a partner that will not only adequately support your research compute environment needs, but also help you leverage innovation to drive greater value out of your research efforts. Those considerations and qualities include:

- Unique Life Sciences Specialization and Mastery

- The Ability to Bridge the Gap Between Science and IT

- A High Level of Adaptability

- A Service Model That Fits Research Goals

In this last installment of our five-part series, we’ll cover one of the most vital considerations when choosing your IT partner: Dedication and Accountability.

You’re More than A Service Ticket

Working with any vendor requires dedication and accountability from both parties, but especially in the Life Sciences R&D space where project goals and needs can shift quickly and with considerable impact.

Deploying the resources necessary to meet your goals requires a partner who is proactive rather than reactive, and who brings a deep understanding of, and a vested interest in, your project outcomes (if you recall, this is a critical reason why a service model based on SLAs rather than results can be problematic).

Importantly, when scientific computing providers align themselves with their customers’ research goals, it changes the nature of the relationship. Not only will your team have a reliable resource to help troubleshoot obstacles and push through roadblocks, it will also have a trusted advisor to provide strategic guidance on how to accomplish your goals in the best way for your needs (and, ideally, to help you avoid issues before they surface). And if there is a challenge that must be overcome? A vested partner will demonstrate a sense of responsibility and urgency to resolve it expeditiously and optimally, rather than simply getting it done or, worse, pointing the finger elsewhere.

It’s this combination of knowledge, experience and commitment that will make a tangible difference in the value of your relationship.

Don’t Compromise

Now that you have all five considerations, it’s time to put them into practice. Choose a scientific computing partner whose services reflect the specialized IT needs of your scientific initiatives and who can deliver robust, consistent results.

RCH Solutions Is Here to Help You Succeed

RCH has long been a provider of specialized computing services exclusively to the Life Sciences. For more than 30 years, our team has been called upon to help biotechs and pharmas across the globe architect, implement, optimize and support compute environments tasked with driving performance for scientific research teams. Find out how RCH can help support your research team and enable faster, more efficient scientific discoveries by getting in touch with our team.